
Unspan two hard drives

Posted: 2008-04-08 11:23pm

by Dominus Atheos

I just installed Vista on a new computer, but for some reason the hard drive I wanted to install it to didn't show up during setup, so I was forced to install it on a different HDD. I've fixed that issue, and now I want to reinstall Vista on the formerly broken drive.

The problem is that before I decided to redo the installation the right way, I set up a workaround that would have given me the same features. I have three HDDs: two 500 GB drives and one 160 GB drive. What I originally wanted was to install the OS on the 160 GB drive and then span the two 500 GB drives in Windows to get a single 1 TB volume. But since the Vista installer couldn't see the 160 GB drive, I created a 150 GB partition on one of the 500 GB drives and installed Vista there. Then I fixed the issue with the 160 GB drive and spanned it with the other 500 GB drive, creating a 660 GB volume.

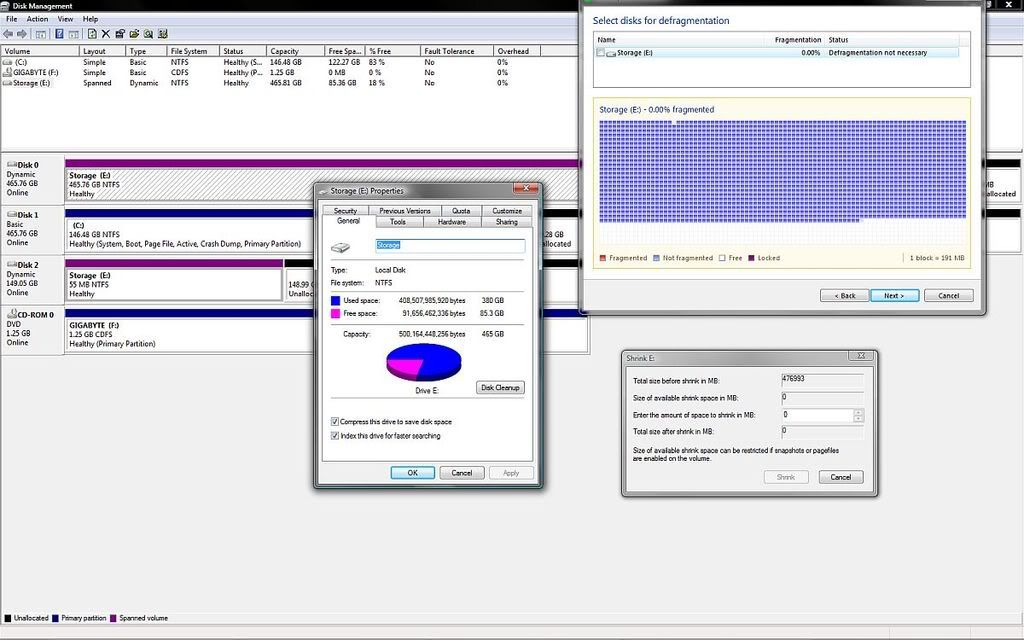

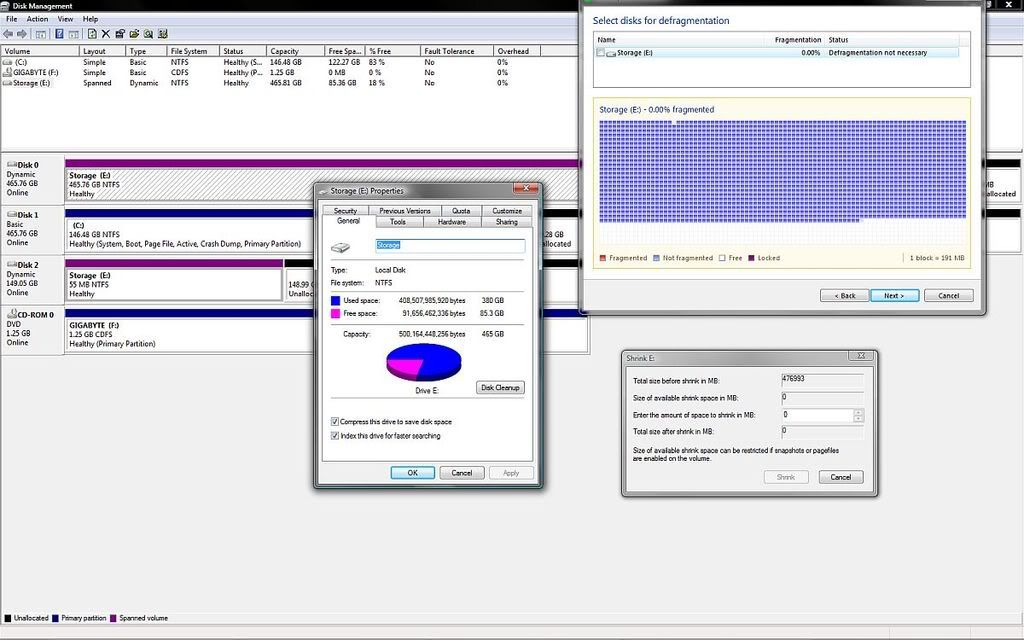

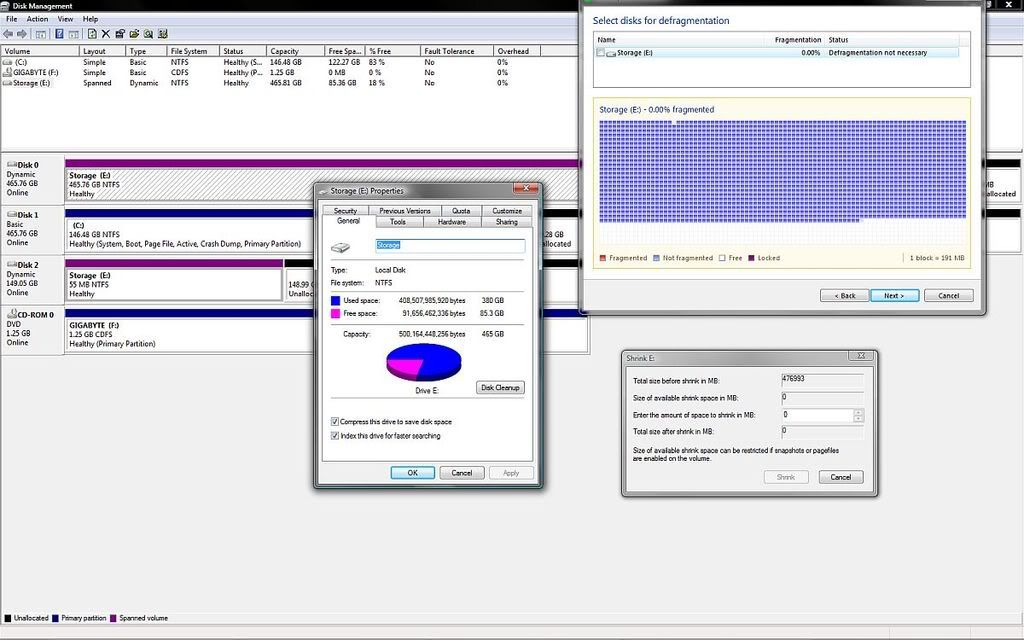

But now that I decided to do the whole thing over again, I want to undo the spanning of the two drives, and have two separate volumes (or just one volume that's just on the 500gb drive) so I can install vista to the 160 drive without wiping out the data I have on the 500gb drive, but vista doesn't give me on option to undo the span. I tried shrinking the volume, but it left 55 megs on the 160 drive. I thought it might be fragmented, so I ran a defragmenter on it, but that didn't change anything. Help?

Screenshot of what I'm dealing with:

If you need any more information, just ask.

Posted: 2008-04-08 11:29pm

by phongn

You can't remove disk spanning without destroying your data.

Posted: 2008-04-08 11:53pm

by Uraniun235

Oh my god, why would you span hard drives like that? I mean, I guess it made sense back in 1997 when hard drives were like 4 GB apiece, but jesus.

Honestly, if you want a terabyte-sized volume, get a terabyte drive already. It's a hell of a lot cooler to know you've got a single drive that holds a whole god damn terabyte than to know you've got two hard drives forming a single volume that could fly apart at any moment, because once one drive dies the whole thing is pooched.

As for what to do: move all of the data you want to keep off the spanned volume, then reformat the drives more sensibly. "But but but I don't have enough room for my FILEZ!" Well, then it's time to either buy some blank DVDs or another hard drive. (Or have a LAN party, shuffle files off to other people's computers, reconfigure your system, and then pull your files back.)

Posted: 2008-04-09 12:06am

by Dominus Atheos

Uraniun235 wrote:
> Oh my god, why would you span hard drives like that? I mean, I guess it made sense back in 1997 when hard drives were like 4 GB apiece, but jesus.
>
> Honestly, if you want a terabyte-sized volume, get a terabyte drive already. It's a hell of a lot cooler to know you've got a single drive that holds a whole god damn terabyte than to know you've got two hard drives forming a single volume that could fly apart at any moment, because once one drive dies the whole thing is pooched.

Because the 500 GB drive I was using was getting full? Why the hell should I buy a 1 TB drive when I have a (refurbished) 500 GB drive sitting in a closet?

And why the hell should a JBOD array lose all the data on it if one drive stops working? I mean, of course I'll have to use data recovery software to get it out, but I'd rather risk having to do that than spend $150 on a hard drive.

> As for what to do: move all of the data you want to keep off the spanned volume, then reformat the drives more sensibly. "But but but I don't have enough room for my FILEZ!" Well, then it's time to either buy some blank DVDs or another hard drive. (Or have a LAN party, shuffle files off to other people's computers, reconfigure your system, and then pull your files back.)

In case you can't tell from the screenshots, I have more than enough free space to do that; I was just hoping there was a simple DOS command I could run to separate them.

Posted: 2008-04-09 12:26am

by phongn

Dominus Atheos wrote:
> And why the hell should a JBOD array lose all the data on it if one drive stops working? I mean, of course I'll have to use data recovery software to get it out, but I'd rather risk having to do that than spend $150 on a hard drive.

Because you've just ripped a chunk out of the filesystem? Because the MFT (NTFS's Master File Table) might be on the dead drive?
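To make that concrete, here is a toy Python model of a spanned (concatenated) volume. It is a sketch under simplifying assumptions, not how Vista's dynamic disks actually lay out NTFS: the volume is simply the first disk's blocks followed by the second disk's, each file is a hypothetical list of (start, length) block extents, and the MFT is assumed to sit at the start of the volume. Losing one disk removes a whole range of logical blocks, and any file or metadata structure with an extent in that range is damaged.

```python
from dataclasses import dataclass


@dataclass
class Disk:
    name: str
    blocks: int  # capacity in (toy) blocks


def locate(disks, lba):
    """Map a logical block address on a spanned volume to (disk name, block on that disk).

    Spanning just concatenates the disks: the first disk holds the first
    N logical blocks, the next disk continues where it ends, and so on.
    """
    offset = 0
    for disk in disks:
        if lba < offset + disk.blocks:
            return disk.name, lba - offset
        offset += disk.blocks
    raise ValueError(f"block {lba} is past the end of the volume")


def damaged_files(disks, files, dead_disk):
    """Names of files with at least one block on the dead disk."""
    hit = []
    for name, extents in files.items():
        blocks = (lba for start, length in extents for lba in range(start, start + length))
        if any(locate(disks, lba)[0] == dead_disk for lba in blocks):
            hit.append(name)
    return hit


# Hypothetical layout: a 160-block disk spanned with a 500-block disk (660 blocks total).
disks = [Disk("disk160", 160), Disk("disk500", 500)]
files = {
    "$MFT": [(0, 8)],                     # assumed to sit at the start of the volume
    "movie.avi": [(100, 40), (400, 50)],  # fragmented across both disks
    "photos.zip": [(300, 20)],            # entirely on the second disk
}
print(damaged_files(disks, files, "disk160"))  # ['$MFT', 'movie.avi']
print(damaged_files(disks, files, "disk500"))  # ['movie.avi', 'photos.zip']
```

In this toy layout, losing the 160-block member takes out the MFT, so even `photos.zip`, whose blocks survive, could only be found again with recovery tools that scan the raw disk.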

Posted: 2008-04-09 12:26am

by RThurmont

You do realize that you can, with a much higher degree of safety, use a separate partition on the second disk? Heck, by default most Linux distros create at least three separate partitions: a swap partition (used by the kernel to swap out memory under heavy load; on Windows, spare space in the NTFS volume is used for this purpose, IIRC), a root partition (which stores most programs and globally shared data) and a home partition (which stores most user-specific data, such as documents).

Some consider this approach to be safer than having one single, monolithic partition on each disk. I think almost everyone would agree that either approach is safer than spanning a filesystem across multiple disks, which strikes me as utterly retarded.

As an aside, I'm not much of a fan of RAID. As I see it, RAID tends to back up a few files you need and many files you don't need against an unlikely occurrence (physical failure of the HD), as opposed to backing up files that are actually important against both unlikely and likely occurrences (such as viruses, malware, FS corruption due to OS irregularities, or accidental deletion), which is what you get with a proper backup regime.

Posted: 2008-04-09 12:39am

by Ariphaos

Dominus Atheos wrote:
> And why the hell should a JBOD array lose all the data on it if one drive stops working? I mean, of course I'll have to use data recovery software to get it out, but I'd rather risk having to do that than spend $150 on a hard drive.

I have no idea how Vista manages spanned disks. If it keeps each file's fragments on a single disk and has a backup of the file table on each disk, there should be no real flaws.

Still, it's not like you were running short on drive letters, so why span them?

Posted: 2008-04-09 12:39am

by phongn

RThurmont wrote:
> You do realize that you can, with a much higher degree of safety, use a separate partition on the second disk? Heck, by default most Linux distros create at least three separate partitions: a swap partition (used by the kernel to swap out memory under heavy load; on Windows, spare space in the NTFS volume is used for this purpose, IIRC), a root partition (which stores most programs and globally shared data) and a home partition (which stores most user-specific data, such as documents).

Windows automatically grows the pagefile as needed; you can also spread it across multiple drives if you wish, or give it a fixed size (emulating the UNIX model).

> As an aside, I'm not much of a fan of RAID. As I see it, RAID tends to back up a few files you need and many files you don't need against an unlikely occurrence (physical failure of the HD), as opposed to backing up files that are actually important against both unlikely and likely occurrences (such as viruses, malware, FS corruption due to OS irregularities, or accidental deletion), which is what you get with a proper backup regime.

HD failure is not unlikely, but at any rate, RAID is not a backup. RAID and a proper backup schedule are complementary.

Posted: 2008-04-09 12:48am

by Uraniun235

Dominus Atheos wrote:
> Because the 500 GB drive I was using was getting full? Why the hell should I buy a 1 TB drive when I have a (refurbished) 500 GB drive sitting in a closet?
>
> And why the hell should a JBOD array lose all the data on it if one drive stops working? I mean, of course I'll have to use data recovery software to get it out, but I'd rather risk having to do that than spend $150 on a hard drive.

I didn't say "if you want a terabyte of space you should buy a terabyte drive", I said "if you want a terabyte volume you should buy a terabyte drive".

Most documentation I turn up with a quick search says all the data in a spanned array is pooched if a disk in the array fails. Pretty sure the burden is on you to show otherwise.

Also, you can have a terabyte of space without risking your data on a spanned array: you can have two separate volumes. A terrible burden, to be sure, but then you're not posting your ridiculous shenanigans on the internet to be laughed at.

RThurmont wrote:
> Some consider this approach to be safer than having one single, monolithic partition on each disk.

Maybe for the disk the OS resides on, but what about disks purely used for storage? It seems redundant to have anything but a single partition on a disk intended just to house a shitload of data.

Posted: 2008-04-09 03:45am

by RThurmont

Uraniun235 wrote:
> Maybe for the disk the OS resides on, but what about disks purely used for storage? It seems redundant to have anything but a single partition on a disk intended just to house a shitload of data.

Suppose the OS crashes during a read/write operation on said filesystem? I've seen this corrupt a pre-installed FAT32 filesystem on a WD USB hard drive. It's less likely with a sane, journaled filesystem like ext3, but still possible. Redundancy is good. Also, remember, most OSes do not handle multiple partitions in the stupid, broken Windows manner. On a UNIX system, it really doesn't matter how many partitions you have, since you can access everything in a consistent manner.
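Roughly why journaling helps with crashes: the filesystem first records the change it is about to make in a journal, marks that record committed, and only then updates the real structures. After a crash, recovery replays committed records and discards uncommitted ones. The Python below is a toy model of that write-ahead idea with made-up names (`JournaledStore`, the `crash_*` flags); real ext3 is far more involved, and its default ordered mode journals metadata rather than file contents.

```python
class JournaledStore:
    """Toy write-ahead journal in front of a key/value "disk"."""

    def __init__(self):
        self.disk = {}     # the real on-disk structures
        self.journal = []  # pending records: {"key", "value", "committed"}

    def write(self, key, value, crash_before_commit=False, crash_before_apply=False):
        record = {"key": key, "value": value, "committed": False}
        self.journal.append(record)       # 1. log the intended change
        if crash_before_commit:
            return                        # power lost: record never committed
        record["committed"] = True        # 2. commit the log record
        if crash_before_apply:
            return                        # power lost before the real update
        self.disk[key] = value            # 3. apply the change in place
        self.journal.pop()                # 4. checkpoint: this record is no longer needed

    def recover(self):
        """Replay committed records and throw away uncommitted ones."""
        for record in self.journal:
            if record["committed"]:
                self.disk[record["key"]] = record["value"]
        self.journal.clear()


fs = JournaledStore()
fs.write("a.txt", "hello")
fs.write("b.txt", "half-written", crash_before_commit=True)
fs.write("c.txt", "committed", crash_before_apply=True)
fs.recover()
print(fs.disk)  # {'a.txt': 'hello', 'c.txt': 'committed'}
```

Recovery only has to scan the small journal rather than check the whole filesystem, and each logged change ends up either fully applied or discarded.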

So if I have an ext3 partition on /dev/sda3, another on /dev/sdb1 and another on /dev/sdb2, I can mount all of them to one or more directories, such as /media/somerandomstoragedevice or /mnt/1. Plan 9's union directories, which can span networks, are a particularly elegant implementation of this.
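A rough model of how one directory tree can front several partitions: the system keeps a table of mount points, and a path is served by the longest mount point that is the path itself or one of its ancestors. This Python sketch reuses the device names from the post as a hypothetical mount table; the kernel really resolves paths component by component, so treat this as an illustration only.

```python
from pathlib import PurePosixPath

# Hypothetical mount table: mount point -> device.
MOUNTS = {
    "/": "/dev/sda3",
    "/media/somerandomstoragedevice": "/dev/sdb1",
    "/mnt/1": "/dev/sdb2",
}


def resolve(path):
    """Return (device, path inside that device's filesystem) for an absolute path."""
    p = PurePosixPath(path)
    best = PurePosixPath("/")  # the root mount always matches
    for mount_point in MOUNTS:
        mp = PurePosixPath(mount_point)
        # Compare whole path components, so /mnt/10 does not match /mnt/1.
        if (mp == p or mp in p.parents) and len(mp.parts) > len(best.parts):
            best = mp
    relative = p.relative_to(best)
    inner = "/" if str(relative) == "." else "/" + str(relative)
    return MOUNTS[str(best)], inner


print(resolve("/mnt/1/music/a.ogg"))  # ('/dev/sdb2', '/music/a.ogg')
print(resolve("/home/user/doc.txt"))  # ('/dev/sda3', '/home/user/doc.txt')
```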

Posted: 2008-04-09 04:01am

by phongn

RThurmont wrote:
> Suppose the OS crashes during a read/write operation on said filesystem? I've seen this corrupt a pre-installed FAT32 filesystem on a WD USB hard drive. It's less likely with a sane, journaled filesystem like ext3, but still possible. Redundancy is good.

Then couldn't the whole drive be in an inconsistent state anyway?

> Also, remember, most OSes do not handle multiple partitions in the stupid, broken Windows manner. On a UNIX system, it really doesn't matter how many partitions you have, since you can access everything in a consistent manner.

It doesn't matter on Windows either.

> So if I have an ext3 partition on /dev/sda3, another on /dev/sdb1 and another on /dev/sdb2, I can mount all of them to one or more directories, such as /media/somerandomstoragedevice or /mnt/1. Plan 9's union directories, which can span networks, are a particularly elegant implementation of this.

There's nothing stopping you from mounting a volume on a directory in Windows.

Posted: 2008-04-09 11:36am

by Pu-239

UnionFS is nice on Linux (or Mac OS via FUSE). It lets you merge two directories or volumes into one without compromising data integrity if one of the drives dies, since they're still two separate filesystems underneath. I'm not sure whether they've made it smart enough to put files onto the other partition when one gets full; I've only used it for my 32-bit chroot on 64-bit Ubuntu. I guess that's not really serious, though, since you can then just give the emptier one higher priority.
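A toy Python model of the union idea: branches are stacked in priority order, lookups return the first branch that has the name, and each branch stays an independent filesystem, so losing one leaves the other intact. The placement rule in `create` (first branch with enough room) is a hypothetical policy matching what is wished for above, not a description of what UnionFS itself does; all names and sizes are made up.

```python
from dataclasses import dataclass, field


@dataclass
class Branch:
    name: str
    capacity: int                               # toy size units
    files: dict = field(default_factory=dict)   # file name -> size

    def free(self):
        return self.capacity - sum(self.files.values())


class Union:
    """Toy union mount: branches are listed from highest to lowest priority."""

    def __init__(self, branches):
        self.branches = branches

    def lookup(self, name):
        """Return the name of the first branch that has the file, or None."""
        for branch in self.branches:
            if name in branch.files:
                return branch.name
        return None

    def listdir(self):
        """Merged listing; a name present in several branches appears once."""
        names = set()
        for branch in self.branches:
            names.update(branch.files)
        return sorted(names)

    def create(self, name, size):
        """Hypothetical placement policy: first branch, in priority order, with room."""
        for branch in self.branches:
            if branch.free() >= size:
                branch.files[name] = size
                return branch.name
        raise OSError("no branch has enough free space")


disk_a = Branch("disk_a", capacity=10, files={"notes.txt": 2, "old.iso": 7})
disk_b = Branch("disk_b", capacity=50, files={"music.ogg": 5})
merged = Union([disk_a, disk_b])
print(merged.listdir())             # ['music.ogg', 'notes.txt', 'old.iso']
print(merged.create("big.mkv", 8))  # disk_b (disk_a has only 1 unit free)

# If disk_a dies, drop it from the union: disk_b is still a complete filesystem.
merged.branches.remove(disk_a)
print(merged.listdir())             # ['big.mkv', 'music.ogg']
```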

Also, the problem with splitting partitions the way RThurmont suggests is that you can't exactly predict how space should be allocated. There's LVM, but Ubuntu doesn't support it on the standard install (you have to use the alternate CD, which has no live CD).

Does anyone know if RAID-Z on Solaris really allows a JBOD with redundancy? I've heard bad things about ZFS's reliability, though...

RThurmont, your constant Windows/Mac OS bashing sounds kind of annoying even to Linux users...

Posted: 2008-04-09 12:11pm

by Uraniun235

RThurmont wrote:
> Suppose the OS crashes during a read/write operation on said filesystem? I've seen this corrupt a pre-installed FAT32 filesystem on a WD USB hard drive. It's less likely with a sane, journaled filesystem like ext3, but still possible. Redundancy is good.

I don't follow. Why does this mean I'd want multiple partitions on the same (secondary, data-storage-only) hard drive? Your post only seems to suggest "don't use FAT32", which I don't anyway, because (among other things) its 4 GB file size limit is not my friend.
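For reference, that limit comes from FAT32 storing each file's size in a 32-bit field in the directory entry, so the largest possible file is 2^32 − 1 bytes, one byte short of 4 GiB. A trivial Python check; the helper name and the DVD-image size are illustrative only.

```python
FAT32_MAX_FILE_SIZE = 2**32 - 1  # file size is a 32-bit unsigned field


def fits_on_fat32(size_bytes):
    """True if a single file of this size can be stored on a FAT32 volume."""
    return size_bytes <= FAT32_MAX_FILE_SIZE


dvd_image = 4_700_000_000  # roughly the size of a single-layer DVD image
print(FAT32_MAX_FILE_SIZE)       # 4294967295
print(fits_on_fat32(dvd_image))  # False
```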

Posted: 2008-04-09 12:25pm

by Pu-239

He seems to think that if the OS crashes, it will corrupt an entire partition, so it's best to keep partitions small rather than have one take up the entire drive. Of course, if the OS crashes that badly, as phong mentioned, it has a decent chance of corrupting all partitions anyway.

Frankly, I haven't really seen any OS go tits up and seriously corrupt anything unless the power was yanked somehow (my XFS-formatted drive got serious corruption after I yanked the power out of impatience). I now just use ext3: performance differences between filesystems are negligible, and data integrity and reliability are far more important.

Posted: 2008-04-09 01:27pm

by TheFeniX

RThurmont wrote:
> As an aside, I'm not much of a fan of RAID. As I see it, RAID tends to back up a few files you need and many files you don't need against an unlikely occurrence (physical failure of the HD), as opposed to backing up files that are actually important against both unlikely and likely occurrences (such as viruses, malware, FS corruption due to OS irregularities, or accidental deletion), which is what you get with a proper backup regime.

RAID isn't about data integrity or safety; that's what a backup is for. RAID is about uptime and the ability to have your PC still function in the event of an HDD loss. With the exception of RAID 0 (which isn't even really RAID), the complete loss of one physical HDD (or two, depending on the RAID level) will not take the computer offline and will still allow access to the services on it.

It allows you to keep working with a computer (mainly servers) while you effect repairs. Anyone who thinks RAID is a backup solution will most likely be out of a job once something actually does happen and data is lost.
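Both halves of that point fit in one toy Python model of a two-disk mirror (RAID 1). The `Mirror` class and file names are hypothetical and ignore everything a real controller does; the sketch only shows that a dead member does not stop reads (uptime), while an accidental delete is mirrored instantly, so only a separate point-in-time copy (the backup) still has the file.

```python
import copy


class Mirror:
    """Toy RAID-1: every write and delete goes to all healthy member disks."""

    def __init__(self, members=2):
        self.disks = [{} for _ in range(members)]

    def healthy(self):
        return [disk for disk in self.disks if disk is not None]

    def write(self, path, data):
        for disk in self.healthy():
            disk[path] = data

    def delete(self, path):
        for disk in self.healthy():
            disk.pop(path, None)

    def fail(self, index):
        self.disks[index] = None  # drive is dead; the array keeps running degraded

    def read(self, path):
        for disk in self.healthy():
            if path in disk:
                return disk[path]
        raise FileNotFoundError(path)


array = Mirror()
array.write("report.doc", b"v1")
snapshot = copy.deepcopy(array.healthy()[0])  # backup copy kept on separate media

array.fail(0)                    # one member drive dies...
print(array.read("report.doc"))  # b'v1': ...but the data is still served

array.delete("report.doc")                 # an accidental delete is mirrored at once
print("report.doc" in array.healthy()[0])  # False
print(snapshot["report.doc"])              # b'v1': only the backup still has it
```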

Posted: 2008-04-09 03:28pm

by phongn

Pu-239 wrote:
> Does anyone know if RAID-Z on Solaris really allows a JBOD with redundancy? I've heard bad things about ZFS's reliability, though...

Er, no. RAID-Z is most similar to RAID-5 and RAID-6 in nature, so you'd not get redundancy with a JBOD configuration.
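The redundancy in RAID-5 (and, in a similar spirit, single-parity RAID-Z) comes from a parity block: the XOR of the data blocks in a stripe. If any one block is lost, XOR-ing the survivors with the parity rebuilds it, which a plain JBOD concatenation has no way to do. A toy Python sketch of just that arithmetic, with made-up block contents; real RAID-Z also uses variable-width stripes and per-block checksums, which this ignores.

```python
from functools import reduce


def xor_blocks(*blocks):
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# One stripe across three data disks plus one parity disk (hypothetical contents).
data = [b"AAAA", b"BBBB", b"CCCC"]
parity = xor_blocks(*data)

# Disk 1 dies: rebuild its block from the surviving data blocks and the parity.
rebuilt = xor_blocks(data[0], data[2], parity)
print(rebuilt)  # b'BBBB'
```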

Posted: 2008-04-09 06:18pm

by Pu-239

Ah, I was under the impression that ZFS/RAID-Z lets you throw a bunch of heterogeneous disks together and still get some kind of redundancy.

Is having two hard drives, with one unmounted, spun down, and periodically synced with the other, an okay backup solution? Sure, something might fry the box, the house may burn down, whatever, but the likelihood of corruption on an unmounted volume is pretty low, yes?
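The sync step itself can be as simple as a one-way copy of files whose size or modification time changed. A minimal Python sketch using only the standard library, under stated assumptions: `sync_one_way` is a hypothetical helper, it never deletes anything on the backup side (so an accidental delete on the main drive isn't propagated), it does nothing about mounting or spinning the drive down, and in practice a tool like rsync does this job better.

```python
import shutil
import tempfile
from pathlib import Path


def sync_one_way(source: Path, backup: Path) -> list:
    """Copy new or changed files from source to backup; return what was copied.

    A file counts as changed when its size or modification time differs.
    Files deleted from the source are deliberately left on the backup.
    """
    copied = []
    for src in source.rglob("*"):
        if not src.is_file():
            continue
        rel = src.relative_to(source)
        dst = backup / rel
        if dst.exists():
            s, d = src.stat(), dst.stat()
            if s.st_size == d.st_size and int(s.st_mtime) == int(d.st_mtime):
                continue  # unchanged since the last sync
        dst.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, dst)  # copy2 also copies the modification time
        copied.append(rel.as_posix())
    return sorted(copied)


# Demonstration on throwaway directories standing in for the two drives.
with tempfile.TemporaryDirectory() as main_dir, tempfile.TemporaryDirectory() as spare_dir:
    main, spare = Path(main_dir), Path(spare_dir)
    (main / "docs").mkdir()
    (main / "docs" / "todo.txt").write_text("back up the drives")
    first = sync_one_way(main, spare)   # copies docs/todo.txt
    second = sync_one_way(main, spare)  # copies nothing: already in sync
    print(first, second)
```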

Also, is it better to spin up such a hard drive to sync once a day, or just keep it running all the time?

Posted: 2008-04-09 06:30pm

by Xon

TheFeniX wrote:
> RAID isn't about data integrity or safety; that's what a backup is for. RAID is about uptime and the ability to have your PC still function in the event of an HDD loss.

RAID is also about raw performance when you need a huge amount of throughput to keep everything going. More things can access the array at once without degrading performance.
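Much of that throughput comes from striping: consecutive chunks of data are spread round-robin across the member disks, so one large sequential read keeps every disk busy at once. A toy Python mapping for RAID-0-style striping; the chunk numbering and the four-disk count are arbitrary assumptions.

```python
def stripe_location(chunk_index, disks):
    """Map a logical chunk number to (disk, chunk offset on that disk), round-robin."""
    return chunk_index % disks, chunk_index // disks


def disks_touched(first_chunk, count, disks):
    """Which member disks a read of `count` consecutive chunks will hit."""
    return sorted({stripe_location(c, disks)[0] for c in range(first_chunk, first_chunk + count)})


print(stripe_location(5, 4))   # (1, 1)
print(disks_touched(0, 8, 4))  # [0, 1, 2, 3]: all four disks work in parallel
print(disks_touched(0, 1, 4))  # [0]: a tiny read only gets one disk's speed
```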

Posted: 2008-04-10 05:09pm

by RThurmont

Pu-239 wrote:
> Also, the problem with splitting partitions the way RThurmont suggests is that you can't exactly predict how space should be allocated. There's LVM, but Ubuntu doesn't support it on the standard install (you have to use the alternate CD, which has no live CD).

Well, the Ubuntu live CD installer (Ubiquity) sucks anyway. That said, I don't use LVM that much myself (although I do have a RHEL5 clone installed on it). I somewhat prefer the simplicity and interoperability of old-fashioned DOS extended partitions: they let multiple Linux and Windows installs coexist easily on the same HD, and you can use the spare primary partition slots for FreeBSD, Plan 9 or another OS that doesn't like a logical partition.
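For background on those "primary partition slots": a classic MBR holds exactly four 16-byte partition entries starting at byte offset 446, followed by the 0x55 0xAA signature at offset 510. One entry can be an extended partition (type 0x05 or 0x0F, or 0x85 for Linux extended) that chains to the logical partitions. The Python sketch below decodes the four primary entries from a synthetic 512-byte sector built in the example; it is read-only and illustrative, it does not walk the logical-partition chain, and the partition sizes are made up.

```python
import struct

ENTRY_FORMAT = "<B3sB3sII"  # status, CHS first, type, CHS last, first LBA, sector count
EXTENDED_TYPES = {0x05, 0x0F, 0x85}  # extended (CHS), extended (LBA), Linux extended


def read_primary_partitions(sector: bytes):
    """Decode the four primary partition entries of a classic MBR sector."""
    if len(sector) != 512 or sector[510:512] != b"\x55\xaa":
        raise ValueError("not a valid MBR sector")
    entries = []
    for slot in range(4):
        raw = sector[446 + 16 * slot : 446 + 16 * (slot + 1)]
        status, _, ptype, _, first_lba, sectors = struct.unpack(ENTRY_FORMAT, raw)
        if ptype == 0:
            continue  # unused slot
        entries.append({
            "slot": slot + 1,
            "bootable": status == 0x80,
            "type": hex(ptype),
            "extended": ptype in EXTENDED_TYPES,
            "first_lba": first_lba,
            "sectors": sectors,
        })
    return entries


def make_entry(status, ptype, first_lba, sectors):
    """Build one 16-byte entry; CHS fields are left zero to keep the example short."""
    return struct.pack(ENTRY_FORMAT, status, b"\0\0\0", ptype, b"\0\0\0", first_lba, sectors)


# Synthetic MBR: a bootable NTFS partition plus an extended partition for logical drives.
sector = bytearray(512)
sector[446:462] = make_entry(0x80, 0x07, 2048, 40_000_000)
sector[462:478] = make_entry(0x00, 0x05, 40_002_048, 60_000_000)
sector[510:512] = b"\x55\xaa"

parts = read_primary_partitions(bytes(sector))
for p in parts:
    print(p["slot"], p["type"], "extended" if p["extended"] else "primary")
# 1 0x7 primary
# 2 0x5 extended
```

Slots 3 and 4 are empty here, which is the kind of spare primary slot that could hold an OS that won't boot from a logical partition.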

Yes, I do admit that if an OS goes down hard, it could corrupt the entire disk, but I'd say it's quite a bit more likely to mess up the partition it's actively using.