The Word “Singularity” Has Lost All Meaning

Moderator: Alyrium Denryle

- NecronLord

- Harbinger of Doom

- Posts: 27384

- Joined: 2002-07-07 06:30am

- Location: The Lost City

Also, I love the idea of cognitive modification for entertainment. I'm definitely stealing that one and adding it to my list of ideas to put in some sci-fi somewhere...

And it appears I owe you an apology. Some people 'round here apparently don't get the performance difference...

Superior Moderator - BotB - HAB [Drill Instructor]-Writer- Stardestroyer.net's resident Star-God.

"We believe in the systematic understanding of the physical world through observation and experimentation, argument and debate and most of all freedom of will." ~ Stargate: The Ark of Truth

- Starglider

- Miles Dyson

- Posts: 8709

- Joined: 2007-04-05 09:44pm

- Location: Isle of Dogs

NecronLord wrote: Also, I love the idea of cognitive modification for entertainment. I'm definitely stealing that one and adding it to my list of ideas to put in some sci-fi somewhere...

Greg Egan features it in 'Diaspora'; one of the main characters takes another character to see a piece of performance art that can only be properly appreciated by temporarily modifying one's mental architecture to give everything much greater emotional significance. This is achieved via an 'outlook', which is a kind of 'cognitive overlay'; in the upload-and-AI society that most of the novel takes place in, use of these is very common, both temporarily and semi-permanently. Egan also illustrates the concept of falling into a mental attractor when one character adopts a 'toxic' self-reinforcing outlook that essentially irreversibly turns them into a nihilist (though it would of course be reversible if that society hadn't enshrined the concept of cognitive independence).

While rather dry, 'Diaspora' is essential reading for anyone seriously interested in AI or the Singularity. Egan still makes a few serious AI mistakes, but he's by far the best fiction author I've seen deal with the subject.

- Ariphaos

- Jedi Council Member

- Posts: 1739

- Joined: 2005-10-21 02:48am

- Location: Twin Cities, MN, USA

Starglider wrote: Even taking your upper end figure, that's only 3E15ish FLOPS. 1E18 FLOPS works out to 10 billion FLOPS/neuron, which is extremely high. Best estimates for the number of synapses in the brain are actually about 1E11, which would be 2E13ish ops if every single neuron was firing constantly, which of course never happens.

Holy crap, you have a source for that? One synapse per neuron on average? Or are you mixing up your math and thinking there are only 100 million neurons in the human brain? You appear to be off by three orders of magnitude. But, even then, I've always been under the impression that the average synapses per neuron was well over a thousand; I thought it was ten thousand (what my AI professor who worked in medical brain studies told us) until the reports talking about simulating the mouse brain suggested it was significantly higher.

- Starglider

- Miles Dyson

- Posts: 8709

- Joined: 2007-04-05 09:44pm

- Location: Isle of Dogs

Xeriar wrote: Holy crap, you have a source for that? One synapse per neuron on average? Or are you mixing up your math and thinking there are only 100 million neurons in the human brain? You appear to be off by three orders of magnitude.

This is my mistake; I was cribbing from an earlier post of mine to save time, and I rushed it and got the 'theoretical (silly) maximum' and 'actual lower limit' numbers mixed up. Which was stupid. I'll do it again from the data:

The brain has roughly 10^11 (100 billion) neurons and 10^14 (100 trillion) synapses. The average firing rate for an excited neuron in an active microcolumn is around 200 Hz. The discrepancy comes from your ludicrous assumption that every neuron in the brain is firing at maximum rate all the time. In actual fact somewhere between 0.01% and 1% of neurons are firing at any given moment, which tallies with an estimated maximum aggregate sustained firing rate of 0.16 spikes/second/neuron based on observed metabolic efficiency and nutrient/oxygen demands in mammal brains. An aggregate sustained firing rate of 0.2/second equates to 20 billion neuron firing events and 20 trillion synaptic events per second, for a human brain.

The simplest useful computational model for asynchronous neuron firing is each firing event adding the current synapse weight to the current level of activation of each target neuron, after applying some kind of time decay function to that activation level, then firing the target neuron if it exceeds a threshold. Optimised code won't necessarily do it that way, but 2 FLOPs per affected synapse per neuron fire event is the practical minimum (applied across the vector of target neurons) - 3 in fact for minimal modelling of relative dendrite delays (which also puts some constraints on parallelism, but you can deal with those with static optimisation). This is a lower bound of 6E13 (60 trillion) operations per second (my estimate of 2E13 was for this rate of synapse events but your assignment of one floating-point op each, which is unrealistic even for a lower bound). Bumping that to 17 operations per synapse event (for a moderately detailed dendrite tree model) gives on the order of 1 petaFLOPS. That is an average of around 10,000 FLOPS per neuron, not per synapse (sorry about that). For a very detailed neuron model that models the depolarisation and recovery of each segment of the dendrite tree separately, you are looking at about 10 petaFLOPS.
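
As a rough check on the arithmetic in the two paragraphs above, here is a minimal Python sketch. The constants are the figures quoted in the post; the function names are made up for illustration and nothing here comes from an actual brain-simulation code base. Note that 17 ops per event multiplies out to about 3.4E14, so the 1 petaFLOPS figure reads as an order-of-magnitude round-up; the script also prints the per-event cost that each quoted total would imply.

```python
# Figures quoted in the post above; everything else is derived from them.
NEURONS = 1e11            # roughly 100 billion neurons
SYNAPSES = 1e14           # roughly 100 trillion synapses
AGGREGATE_RATE_HZ = 0.2   # sustained spikes/second/neuron, averaged over the whole brain


def events_per_second(rate_hz=AGGREGATE_RATE_HZ):
    """Return (neuron firing events, synaptic events) per second."""
    return NEURONS * rate_hz, SYNAPSES * rate_hz


def flops_required(ops_per_synaptic_event, rate_hz=AGGREGATE_RATE_HZ):
    """Total operations per second for a given cost per synaptic event."""
    _, synaptic_events = events_per_second(rate_hz)
    return synaptic_events * ops_per_synaptic_event


def implied_ops_per_event(total_flops, rate_hz=AGGREGATE_RATE_HZ):
    """Invert the estimate: the per-event cost implied by a quoted total."""
    _, synaptic_events = events_per_second(rate_hz)
    return total_flops / synaptic_events


if __name__ == "__main__":
    firing, synaptic = events_per_second()
    print(f"neuron firing events/s: {firing:.1e}")
    print(f"synaptic events/s:      {synaptic:.1e}")
    for ops in (1, 2, 3, 17):
        print(f"{ops:>2} ops/event -> {flops_required(ops):.1e} ops/s")
    for label, total in (("1 petaFLOPS", 1e15), ("10 petaFLOPS", 1e16)):
        print(f"{label}: {implied_ops_per_event(total):.0f} ops/event, "
              f"{total / NEURONS:.0f} FLOPS/neuron")
```

Changing AGGREGATE_RATE_HZ to 200 shows how far the "every neuron at full rate" assumption inflates the total.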

As usual that can be reduced by any management logic that can accurately predict which regions of the brain are particularly relevant at any given time; less relevant chunks can be downgraded to bulk (e.g. microcolumn scale) modelling for a major computational saving. This level of understanding is probably a decade further away than simple bulk replication of an entire human brain. All the ancillary stuff such as chemical influences on synapses and dendrite growth is complex to model but not a significant computational drain compared to the primary simulation, because it takes place on such long timescales by comparison. I'd note that I am not actively studying brain modelling myself - but I do correspond with people who are and try to read the most important papers.

Xeriar wrote: But, even then, I've always been under the impression that the average synapses per neuron was well over a thousand; I thought it was ten thousand (what my AI professor who worked in medical brain studies told us) until the reports talking about simulating the mouse brain suggested it was significantly higher.

The average varies with the brain region and particularly cortical layer; the input and output neurons for a microcolumn are much more connected than the central neurons. Each stage in the visual processing chain has lots of bulk pattern recognition neurons and then a much smaller number that send projections to other regions (forwards and backwards). However the global average is still around 10^3.

- Ariphaos

- Jedi Council Member

- Posts: 1739

- Joined: 2005-10-21 02:48am

- Location: Twin Cities, MN, USA

Starglider wrote: The average varies with the brain region and particularly cortical layer; the input and output neurons for a microcolumn are much more connected than the central neurons. Each stage in the visual processing chain has lots of bulk pattern recognition neurons and then a much smaller number that send projections to other regions (forwards and backwards). However the global average is still around 10^3.

This suggests to me it's about five times that, and Wikipedia suggests it is ten times -that-, according to a source eight years later. While I can't find a non-Wikipedia based source, it's closer to what my AI course went over, which suggested a quadrillion synapses.

Starglider wrote: The brain has roughly 10^11 (100 billion) neurons and 10^14 (100 trillion) synapses. The average firing rate for an excited neuron in an active microcolumn is around 200 Hz. The discrepancy comes from your ludicrous assumption that every neuron in the brain is firing at maximum rate all the time. In actual fact somewhere between 0.01% and 1% of neurons are firing at any given moment, which tallies with an estimated maximum aggregate sustained firing rate of 0.16 spikes/second/neuron based on observed metabolic efficiency and nutrient/oxygen demands in mammal brains. An aggregate sustained firing rate of 0.2/second equates to 20 billion neuron firing events and 20 trillion synaptic events per second, for a human brain.

Sorry, I was under the impression that the firing rate was significantly higher (300 hertz versus 200 and a somewhat higher percentage), no problem in conceding that.

Starglider wrote: -snip FLOP discussion-

Err, I was being very rough due to the rather finicky nature of flops, but if you're going to do this you also need to consider what a given FLOP does, and how many bits the operation takes. A synapse is roughly five bits (~20 discrete states), and then you work from there based on what the cell does given all the numbers it has to add up.

The above is a bit moot and I'm more referring to your zettaflop per watt statement, as this means a complete operation has to occur in a hundred or so bit changes, which is pushing things when comparing them to neural cell activity, especially considering the parallelism desired and that you are talking about a rather basic analysis of the human neural cell.

- Starglider

- Miles Dyson

- Posts: 8709

- Joined: 2007-04-05 09:44pm

- Location: Isle of Dogs

Xeriar wrote: This suggests to me it's about five times that, and Wikipedia suggests it is ten times -that-, according to a source eight years later. While I can't find a non-Wikipedia based source, it's closer to what my AI course went over, which suggested a quadrillion synapses.

Honestly no one knows for sure. People just slice up (or biopsy) brains, stick small regions under a microscope, count what they can see and multiply. The numbers vary greatly. A lot of samples show low synapse and even neuron density; these tend to get ignored as outliers as the paper I just linked noted. A quadrillion synapses is reasonable as a worst case estimate. At the current rate of computing progress, that's about a five year delay in human-upload-equivalence at any given power/speed.
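
The "five year delay" figure can be sanity-checked with a short Python sketch. The post does not say what "the current rate of computing progress" is, so the 18-month doubling time below is purely an assumption, as is the helper name `delay_years`; the tenfold factor is the gap between 10^14 and a quadrillion (10^15) synapses.

```python
import math


def delay_years(capacity_factor, doubling_time_years=1.5):
    """Years of exponential hardware growth needed to gain `capacity_factor`.

    doubling_time_years=1.5 is an assumed Moore's-law-style rate, not a
    figure taken from the thread.
    """
    return math.log2(capacity_factor) * doubling_time_years


if __name__ == "__main__":
    # 1E14 synapses -> 1E15 synapses is a tenfold increase in required compute.
    print(f"10x more synapses: about {delay_years(10):.1f} years")
    # A slower assumed doubling time stretches the delay proportionally.
    print(f"with 2-year doubling: about {delay_years(10, 2.0):.1f} years")
```

With the assumed 18-month doubling, a tenfold increase comes out just under five years, which matches the post's estimate.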

Xeriar wrote: Err, I was being very rough due to the rather finicky nature of flops, but if you're going to do this you also need to consider what a given FLOP does, and how many bits the operation takes.

Everyone horribly abuses FLOPs. It just gets even worse than usual when you talk about neural modelling. The figures I've used are for the kind of fairly conservative model I've seen proposed by current brain researchers. (In computing terms a FLOP is usually a 64-bit multiply or add these days; hardware vendors like to quote 32-bit vector rates, though frankly that's generally more than good enough for NN work, and sometimes they try to count a fused multiply-add as two operations in benchmarks.)

Incidentally, here's another illustration I just remembered. Here's what happens when you apply a simplistic '200 Hz neurons x 100,000,000,000,000 synapses' style rule to a reasonably modern processor.

The 2GHz Pentium 4 has around 50 million transistors and consumes about 80 watts to deliver up to 8 gigaFLOPS (for continuous stream single-precision SSE; actually more like 0.5 for non-vector branchy double-precision code, but let's be generous). Gate switching energy for this speed and process (130nm) is about 3E-13 joules. If all of those transistors were configured as logic, that would be about 20 million gates. If they were all cycling continuously at the global clock rate, this would give 4E16 logic operations per second (which, if we were being very naive, we might say was equivalent to 5E14 FLOPS, assuming 70 logic ops for a 32 bit add).

So looking at the P4 the way you proposed looking at the brain, we'd say that it gets half a petaFLOP. Add at least an additional order of magnitude if you use the theoretical gate switching rate (based on the settle time) rather than the actual effective frequency when grouped into clocked stages. But wait, based on our measurements of power draw for an isolated gate, that implies a power consumption of 12,000 watts, or 120 kW for the max theoretical rate! How is this possible? It must be magic!

In actual fact only half a percent or so of the transistors are active at any one time and it takes more like 10^4 gates to implement a logic operation; and the actual execution logic takes up only a small fraction of the chip, compared to storage and management overhead.
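
The Pentium 4 illustration above can be reproduced in a few lines of Python. This is only a sketch: the constants are the figures quoted in the post, the function names are hypothetical, and applying the "half a percent active" figure directly to the gate count is a simplifying assumption made here.

```python
# Inputs quoted in the post above.
GATES = 20e6                 # ~50 million transistors treated as ~20 million gates
CLOCK_HZ = 2e9               # 2 GHz global clock
GATE_SWITCH_JOULES = 3e-13   # switching energy per gate at 130 nm
LOGIC_OPS_PER_FLOP = 70      # naive cost of one 32-bit add
MEASURED_WATTS = 80          # what the real chip draws
ACTIVE_FRACTION = 0.005      # "half a percent or so" active at any one time


def naive_logic_ops_per_second():
    """Every gate switching on every clock cycle."""
    return GATES * CLOCK_HZ


def naive_flops():
    """The 'peak rate times component count' style estimate."""
    return naive_logic_ops_per_second() / LOGIC_OPS_PER_FLOP


def implied_watts(active_fraction=1.0):
    """Power draw if the given fraction of gates switches each cycle."""
    return naive_logic_ops_per_second() * active_fraction * GATE_SWITCH_JOULES


if __name__ == "__main__":
    print(f"naive logic ops/s: {naive_logic_ops_per_second():.1e}")
    print(f"naive FLOPS:       {naive_flops():.1e}")
    print(f"implied power, all gates active: {implied_watts():.0f} W")
    print(f"implied power, 0.5% active:      {implied_watts(ACTIVE_FRACTION):.0f} W "
          f"(measured: about {MEASURED_WATTS} W)")
```

The all-gates-active case gives the absurd 12,000 W figure, while the half-percent case lands in the same range as the measured draw, which is the point of the comparison.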

Similarly, looking at the brain, the peak theoretical rate for a neuron may be 300 Hz, but the actual rate averaged across the small active set and the much larger set of currently inactive neurons is more like 0.2 Hz. That doesn't even begin to look at how much lower the effective computing power is due to massive redundancy and horrible (post-noise) precision, the neurons that contribute only the equivalent of computer memory operations, the brain's equivalent of 'management overhead' or the huge amounts of waste caused by the fact that speech areas are only good for speech, vision only for vision, etc., even when you're actually trying to compose a jingle or solve a calculus problem. On a digital computer most of the computing power is available all the time for whatever problem we choose to devote it to. But really those are all arguments for why brains suck compared to AIs (only a small selection from them in fact). For the purposes of reasonably accurate brain simulation, we have to assume we need to simulate every synapse event and depolarisation wave propagation through dendrite trees in a fair degree of detail, though in the long term we may well find ways to near-losslessly lower the detail level for most of the simulated brain.

- Starglider

- Miles Dyson

- Posts: 8709

- Joined: 2007-04-05 09:44pm

- Location: Isle of Dogs

Xeriar wrote: The above is a bit moot and I'm more referring to your zettaflop per watt statement, as this means a complete operation has to occur in a hundred or so bit changes.

If you're referring to the thermal noise floor on power consumption per gate in semiconductor circuits, this does not apply to non-semiconductor substrates such as rapid single flux quantum, which is currently at 10^-19 Joules/binary op at an effective clock rate of 50-100 GHz. Unfortunately AFAIK no one has managed to build an entire processor out of this technology yet due to immature fabrication technology, though functioning logic blocks have been made. The zettaFLOPS reference is from a paper I once read (it was posted to a mailing list I was on) on the performance of a (hypothetical) nanotube based terahertz RSFQ processor. Unfortunately I've spent the last 10 minutes trying to find it via google and I can't, so I can't back up a claim of more than 1E-19 J/op for electronic processing right now. I'll ask about it next time I talk to a nanocomputing person and get back to you.

- Coyote

- Rabid Monkey

- Posts: 12464

- Joined: 2002-08-23 01:20am

- Location: The glorious Sun-Barge! Isis, Isis, Ra,Ra,Ra!

Up until just a few weeks ago, I thought "singularity" meant a black hole point, also. I was confused to hear it in reference to a discussion about artificial intelligence and had to catch on from context that, in that particular case, it was used to describe that point in time when AI truly becomes self-aware and intelligent. At that point, I figured it was a pretty decent way to sum up what would certainly be an event of major, life-changing importance to all. One of those "things will never be the same after this" and "we'll look at time two ways: before this event, and after it".

I then heard the same word used to refer to breaking the light barrier, and realized the term was being applied to anything that would be so universally and fundamentally changing in scope of our understanding of the universe and what we could do in that universe. Again, I thought, Okay, that makes sense. A major, sweeping, all-encompassing thing, the discovery of which will cause an immediate re-evaluation of scientific principles. Unlike slow, creeping, morphing advancements such as, say, the Internet or hybrid cars.

But more & more I hear the term being applied to things like the Internet and I realize that "singularity" is catching on as a buzzword, and will soon mean anything that is neat or cool, or relates to a personal revelation or a catharsis event: "I went to a New Age crystal-fetishist meditation revival, and it was a mind-blowing and eye-opening event for me. A real personal singularity".

Oy, vey.

Something about Libertarianism always bothered me. Then one day, I realized what it was:

Libertarian philosophy can be boiled down to the phrase, "Work Will Make You Free."

In Libertarianism, there is no Government, so the Bosses are free to exploit the Workers.

In Communism, there is no Government, so the Workers are free to exploit the Bosses.

So in Libertarianism, man exploits man, but in Communism, it's the other way around!

If all you want to do is have some harmless, mindless fun, go H3RE INST3ADZ0RZ!!

Grrr! Fight my Brute, you pansy!

- Starglider

- Miles Dyson

- Posts: 8709

- Joined: 2007-04-05 09:44pm

- Location: Isle of Dogs

Coyote wrote: Up until just a few weeks ago, I thought "singularity" meant a black hole point, also. I was confused to hear it in reference to a discussion about artificial intelligence and had to catch on from context that, in that particular case, it was used to describe that point in time when AI truly becomes self-aware and intelligent.

Vinge's original use of it, and frankly as used by all the people I'd consider to have a clue, applies to the first creation of significantly transhuman intelligence (which includes basically all general AI). Significantly non-human but human-equivalent intelligence in large enough numbers will have the same prediction-defeating effect, but that's probably academic as we don't seem likely to uplift dolphins or create Blade Runner replicants any time soon.

Coyote wrote: I then heard the same word used to refer to breaking the light barrier,

Must confess I've never heard that one.

Coyote wrote: and realized the term was being applied to anything that would be so universally and fundamentally changing in scope of our understanding of the universe and what we could do in that universe.

FTL isn't prediction-defeating (well, not unless it's also time travel). We can predict what we'd do with FTL pretty well; essentially what we're doing right now, just on a larger scale. We can look at past occasions when lots of new territory and resources suddenly opened up and draw reasonable analogies (in fact most space opera does just that, though often not very seriously). So yes, whoever it was was just being a trendy muppet.

Coyote wrote: A major, sweeping, all-encompassing thing, the discovery of which will cause an immediate re-evaluation of scientific principles. Unlike slow, creeping, morphing advancements such as, say, the Internet or hybrid cars.

I'd go with 'disruptive technology'. Maybe even 'paradigm shift'. But basically if it doesn't involve transhuman intelligence, it isn't a singularity.

Coyote wrote: But more & more I hear the term being applied to things like the Internet and I realize that "singularity" is catching on as a buzzword, and will soon mean anything that is neat or cool, or relates to a personal revelation or a catharsis event: "I went to a New Age crystal-fetishist meditation revival, and it was a mind-blowing and eye-opening event for me. A real personal singularity".

Yeah, well, if that happens we will need a new double-secret term for people with a clue. 'Blarp' could work. 'Watch out, the blarp is coming.' 'I'm proud to be a blarpian.' Hmm, maybe we need a marketing person for this one...

- Ariphaos

- Jedi Council Member

- Posts: 1739

- Joined: 2005-10-21 02:48am

- Location: Twin Cities, MN, USA

Starglider wrote: So looking at the P4 the way you proposed looking at the brain, we'd say that it gets half a petaFLOP. Add at least an additional order of magnitude if you use the theoretical gate switching rate (based on the settle time) rather than the actual effective frequency when grouped into clocked stages. But wait, based on our measurements of power draw for an isolated gate, that implies a power consumption of 12,000 watts, or 120 kW for the max theoretical rate! How is this possible? It must be magic!

Why are you throwing out this strawman when I already conceded that point? I thought it was averaged faster, but not at full rate. I call this a strawman because the human brain is a unified memory/processing architecture, as opposed to a Von Neumann architecture. That is, in a brain, every synapse is available for activation at any time; this is not true in the case of a processor.

Starglider wrote: Honestly no one knows for sure. People just slice up (or biopsy) brains, stick small regions under a microscope, count what they can see and multiply. The numbers vary greatly. A lot of samples show low synapse and even neuron density; these tend to get ignored as outliers as the paper I just linked noted. A quadrillion synapses is reasonable as a worst case estimate. At the current rate of computing progress, that's about a five year delay in human-upload-equivalence at any given power/speed.

I know that, but I think it's reasonable to consider more recent results to supersede older ones unless we have significant reason to believe a given study is flawed, yes? I give vague numbers (few orders of magnitude) because of such uncertainty.

I'm not making the claim that better-than-human machines won't exist, even for their power output. I just find claims of millions of times to be... more on the optimistic side. Right now networking serial processors to do the job simply isn't working by any reasonable stretch.

Starglider wrote: If you're referring to the thermal noise floor on power consumption per gate in semiconductor circuits, this does not apply to non-semiconductor substrates such as rapid single flux quantum, which is currently at 10^-19 Joules/binary op at an effective clock rate of 50-100 GHz. Unfortunately AFAIK no one has managed to build an entire processor out of this technology yet due to immature fabrication technology, though functioning logic blocks have been made. The zettaFLOPS reference is from a paper I once read (it was posted to a mailing list I was on) on the performance of a (hypothetical) nanotube based terahertz RSFQ processor. Unfortunately I've spent the last 10 minutes trying to find it via google and I can't, so I can't back up a claim of more than 1E-19 J/op for electronic processing right now. I'll ask about it next time I talk to a nanocomputing person and get back to you.

That is still four orders of magnitude below such efficiency (if that's per kelvin anyway). I don't really doubt you, but scaling this sort of thing up has always been fraught with problems. Ultimately you want to transmit some of the obscene amount of data you are producing - in a normal neural net, that's pretty much all of it. It's hard to imagine an entire chip built on such technology functioning properly outside of a superconducting scenario.

- Starglider

- Miles Dyson

- Posts: 8709

- Joined: 2007-04-05 09:44pm

- Location: Isle of Dogs

Xeriar wrote: I just find claims of millions of times to be... more on the optimistic side.

Millions of times is a conservative claim for de novo AGIs - even without nanocomputing, we can both get this much more raw power than the brain simply by putting in much more energy, then make far more efficient use of it (see huge list of likely AI cognitive advantages that apply regardless of raw compute power).

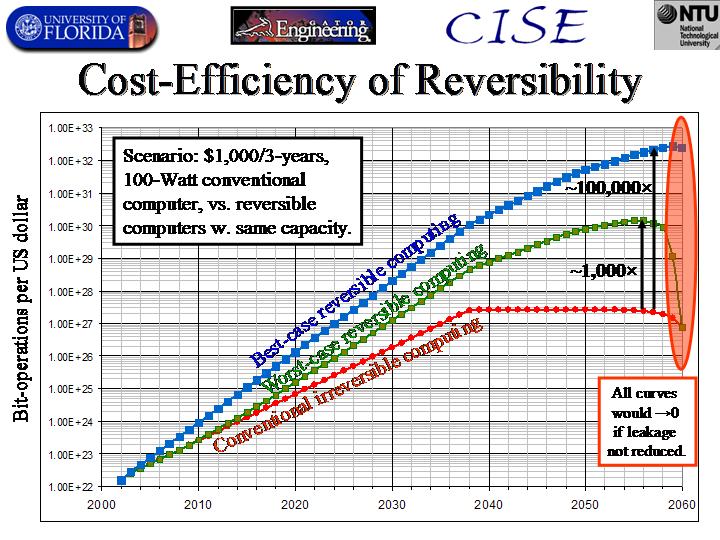

Oh, you're talking about the Landauer limit. Unlike semiconductor leakage issues that does apply to all computation (excepting some very exotic ideas such as black hole computation and maybe some forms of quantum computing), but it's only an upper bound on the power consumption of outputting bits, not on internal operations that generate that output. In other words the answer to the Landauer limit is reversible computing, the principles of which can be applied to conventional electronic computing, nanocomputing and most exotic substrates. I have seen reversible proposals for low power rod logic and RSFQ processors (including the zettaflops case study) - high-efficiency concept designs are generally they're asynchronous as well, both for power consumption and serial speed reasons. Unlike RSFQ, full-scale reversible (and asynchronous) processors have already been made; in fact here's a graph of how much difference it makes to power consumption:That is still four orders of magnitude below such efficiency (if that's per kelvin anyway).

The Landauer limit isn't really relevant for most kinds of computation; other limiting factors generally come into play well before the energy cost of transmitting output bits does.
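
For scale, Landauer's principle puts the minimum energy dissipated per irreversibly erased bit at k_B T ln 2. The Python sketch below evaluates that at a few temperatures and compares it with the two energy budgets mentioned in this thread (1E-19 J/op for RSFQ and 1E-21 J/op, which is what a zettaFLOPS per watt works out to). The choice of temperatures and the helper names are assumptions made for illustration only.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # exact SI value of the Boltzmann constant


def landauer_limit_joules(temperature_k):
    """Minimum energy dissipated by erasing one bit at this temperature."""
    return BOLTZMANN_J_PER_K * temperature_k * math.log(2)


def erasures_per_op(joules_per_op, temperature_k):
    """How many Landauer-limit bit erasures fit in one operation's energy budget."""
    return joules_per_op / landauer_limit_joules(temperature_k)


if __name__ == "__main__":
    budgets = {
        "RSFQ figure quoted above (1E-19 J/op)": 1e-19,
        "zettaFLOPS per watt (1E-21 J/op)": 1e-21,
    }
    # Assumed operating points: room temperature, liquid nitrogen, liquid helium.
    temperatures = {"300 K": 300.0, "77 K": 77.0, "4.2 K": 4.2}
    for t_label, t in temperatures.items():
        print(f"{t_label}: limit = {landauer_limit_joules(t):.2e} J/bit")
        for b_label, joules in budgets.items():
            print(f"    {b_label}: {erasures_per_op(joules, t):.1f} erasures/op")
```

A value below 1 erasure per op means the budget cannot be met at that temperature by irreversible logic at all, which is where reversible computing enters the argument.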

Xeriar wrote: Ultimately you want to transmit some of the obscene amount of data you are producing - in a normal neural net, that's pretty much all of it.

The amount of data transmitted out of the system is only the bandwidth of the motor nerves in the spinal cord (which is pretty pathetic in computing terms).

Xeriar wrote: It's hard to imagine an entire chip built on such technology functioning properly outside of a superconducting scenario.

For RSFQ, it literally won't, since Josephson junctions only work on superconductors (unfortunately not hyperconductors AFAIK), but liquid nitrogen isn't that big of a deal. Frankly the silly nutrient requirements of trying to keep a brain alive in a vat would be much more trouble than a mere liquid nitrogen bath (though to be fair, current fabrication technology can't make RSFQ circuits out of high temperature superconductors - this isn't a physical limitation, it's a practical one). But this is just one of many candidate advanced computing substrates.

- Ariphaos

- Jedi Council Member

- Posts: 1739

- Joined: 2005-10-21 02:48am

- Location: Twin Cities, MN, USA

Starglider wrote: Millions of times is a conservative claim for de novo AGIs - even without nanocomputing, we can both get this much more raw power than the brain simply by putting in much more energy, then make far more efficient use of it (see huge list of likely AI cognitive advantages that apply regardless of raw compute power).

Again, networking such cells to that level of thermal efficiency is going to require superconductors. I consider the cost of cooling to be a part of the total energy cost of a system. It will also affect mobility, dependencies, limitations, etc. that a potential transhuman may not wish to be saddled with.

Starglider wrote: Oh, you're talking about the Landauer limit. Unlike semiconductor leakage issues, that does apply to all computation (excepting some very exotic ideas such as black hole computation and maybe some forms of quantum computing)

Landauer's principle - that's it. I forgot the name, sorry.

Starglider wrote: but it's only an upper bound on the power consumption of outputting bits, not on internal operations that generate that output. In other words the answer to the Landauer limit is reversible computing, the principles of which can be applied to conventional electronic computing, nanocomputing and most exotic substrates. I have seen reversible proposals for low power rod logic and RSFQ processors (including the zettaflops case study) - high-efficiency concept designs are generally asynchronous as well, both for power consumption and serial speed reasons. Unlike RSFQ, full-scale reversible (and asynchronous) processors have already been made; in fact here's a graph of how much difference it makes to power consumption:

Ooh nifty, I hadn't heard about this, thanks.

Starglider wrote: The Landauer limit isn't really relevant for most kinds of computation; other limiting factors generally come into play well before the energy cost of transmitting output bits does.

Err, it's the minimum thermal entropy generated by irreversible bit transformations, which may not always be considered output bits. At least, the way you're talking, you are acting as if the output bits of an entire system are the only ones that are counted. This -can- occur (holographic principle), but it usually doesn't.

Starglider wrote: The amount of data transmitted out of the system is only the bandwidth of the motor nerves in the spinal cord (which is pretty pathetic in computing terms).

I was talking about in-system communication.

Starglider wrote: For RSFQ, it literally won't, since Josephson junctions only work on superconductors (unfortunately not hyperconductors AFAIK), but liquid nitrogen isn't that big of a deal. Frankly the silly nutrient requirements of trying to keep a brain alive in a vat would be much more trouble than a mere liquid nitrogen bath (though to be fair, current fabrication technology can't make RSFQ circuits out of high temperature superconductors - this isn't a physical limitation, it's a practical one). But this is just one of many candidate advanced computing substrates.

The nutrient bath for the human brain provides plasticity and regeneration, so calling it silly outright is a bit misleading. Those are valuable traits for a sentience, at least to me.