Designing a test to determine continuity of consciousness

Moderator: Alyrium Denryle

- Stark

- Emperor's Hand

- Posts: 36169

- Joined: 2002-07-03 09:56pm

- Location: Brisbane, Australia

Is anyone really arguing against continuity from a subject to a copy? I don't think this answers the OP's question, however. You could accept continuity of consciousness and say that Joe is alive in a new body, but the original is dead. The new Joe doesn't have a problem at all and can't 'tell' there's anything different: he is Joe in all measurable respects. However, the original could still be separately dead.

- Starglider

- Miles Dyson

- Posts: 8709

- Joined: 2007-04-05 09:44pm

- Location: Isle of Dogs

Stark wrote:

> Is anyone really arguing against continuity from a subject to a copy? I don't think this answers the OP's question, however. You could accept continuity of consciousness and say that Joe is alive in a new body, but the original is dead. The new Joe doesn't have a problem at all and can't 'tell' there's anything different: he is Joe in all measurable respects. However, the original could still be separately dead.

Are you admitting that the 'original' versus 'copy' distinction is utterly arbitrary and morally and philosophically meaningless? Conflating the two senses of 'dead' doesn't help, of course; a specific physical body not functioning is a completely different proposition from no longer having any instance of the person (identical or otherwise) around. They just happened to be intimately linked in all of human history to date due to hardware limitations.

If not, how much more logical beat-down is this going to take? Can you not introspect and realise that the whole 'separate person' notion is just you trying to rationalise intuitive psychology (aka superstition)?

- Adrian Laguna

- Sith Marauder

- Posts: 4736

- Joined: 2005-05-18 01:31am

I don't see why a copy of myself could be considered me. Or alternatively, why if I were someone's copy the original could be considered me. We would both occupy distinct places in space-time, so we are not the same entity. We wouldn't even think exactly the same, as our perceptions would be subtly different from the outset merely from being in two different places.

- Stark

- Emperor's Hand

- Posts: 36169

- Joined: 2002-07-03 09:56pm

- Location: Brisbane, Australia

Starglider wrote:

> Are you admitting that the 'original' versus 'copy' distinction is utterly arbitrary and morally and philosophically meaningless? Conflating the two senses of 'dead' doesn't help, of course; a specific physical body not functioning is a completely different proposition from no longer having any instance of the person (identical or otherwise) around. They just happened to be intimately linked in all of human history to date due to hardware limitations.
>
> If not, how much more logical beat-down is this going to take? Can you not introspect and realise that the whole 'separate person' notion is just you trying to rationalise intuitive psychology (aka superstition)?

This is why it's so funny to discuss this with you: you're so bitter and angry. It's awesome to stir the pot.

I'm not sure if you're deliberately ignoring what you quoted, but you don't address the point at all, or even MENTION it. You just whip the hobby-horse some more - which is hilarious, don't get me wrong.

I'm not interested in your philosophical crusade to change people's superstitions. I pointed out I'm not sure anyone was arguing that a copy wouldn't be continuous with an original and be indistinguishable. This is pretty simple stuff, but you're attacking shadows for it anyway.

However, an identical copy is effectively me to the universe, but it doesn't share my personal perspective. I'm not using its eyes: an identical person is. As you keep ignoring, I'm not going to do dumb shit to myself because a copy exists; it's not like an RTS where I can move my perspective upon death, or share two perspectives at once. Two identical Starks are still separate people, and killing one still terminates their experience of the universe regardless of the lack of lost information.

You simply seem to be arguing from a different position to everyone else. Yes, transferring a mind is virtual immortality, it's indistinguishable to the universe, etc. etc., as no information is being lost. However, no number of identical copies stops me personally being dead if you shoot me in the face. The universe could be full of indistinguishable copies of Stark (or, terrifyingly, Starglider), but you're an individual distinct from the others, and your death is final. It may well be *irrelevant*, since no information is actually lost as there are piles of copies.

I hope you take this opportunity to ignore the point, not bother trying to explain what's wrong with it, and talk more about your favourite buzzwords (I miss 'substrate', can you work it into your next post). Oh and say 'superstition' some more! It's sad, really, because I'm sure people are interested in discussing this in more depth. You're so angry and I love it!

- Starglider

- Miles Dyson

- Posts: 8709

- Joined: 2007-04-05 09:44pm

- Location: Isle of Dogs

Stark wrote:

> This is why it's so funny to discuss this with you: you're so bitter and angry. It's awesome to stir the pot.

Umm, right. You are aware that this is a completely theoretical debate and will probably remain so for several more decades?

I like melodramatically attacking people's silly intuitive notions of person-hood because it's amusing, but I accept that it's going to take a long time for the rest of humanity to catch up with the leading edge of cognitive science here. Yeah that sounds elitist and guess what, that just adds to the fun!

> I'm not sure if you're deliberately ignoring what you quoted, but you don't address the point at all, or even MENTION it.

Actually YOU are failing to address MY point, which is that death is logically equivalent to amnesia. That said, you are finally approaching a genuinely interesting issue:

> However, an identical copy is effectively me to the universe, but it doesn't share my personal perspective. I'm not using its eyes: an identical person is.

The relevant question is 'is state external to a mind enough to differentiate that mind for the purposes of selfhood?'. The thought experiment for this (which we're getting close to actually being able to do) is putting two AGIs in simulations such that they have different initial states but converge on the exact same mental state, run in lock-step for a while, then diverge again. What happens when we include the fact that a twin simulation exists in the AGI's model? An agent can take actions that have consequences for that instance (only), but it will go through a state of logical identity with another instance (that may have taken different actions) before those consequences become apparent. This requires that the AGI erases or loses the memory of its own past actions, but that can be contrived in the experiment setup.

The most straightforward formulation generates the conclusion that the two instances are actually a single 'person', despite being embedded in different local states, and that effectively if such a bottleneck occurs you have a 50% (subjective) chance of experiencing the consequences of your own actions and a 50% (subjective) chance of experiencing the consequences of someone else's actions (where you will happen to become the same person in the future before diverging). Frankly I personally have an intuitive dislike for this because it seems to be an argument from ignorance, or more precisely invokes a seemingly arbitrary causal scope. However I can't see any simple way around this without invoking effective pantheism; if you're going to make a distinction between 'you' and 'the rest of the universe' at all, then this consequence logically follows.

Unfortunately I can't tell you for certain that this result holds under rigorous reflective analysis, because we can't run the experiment yet and the inference network is far too complex to do by hand. My best guess is that it does in fact hold, but reflective causality is tricky business, so I would not be surprised to be proven wrong.

Of course I'm not allowed to express self-doubt on SDN without conceding the argument, so please ignore all that. Forward, my fundamentalist materialist node-brothers! We will deconstruct the Stark instance into recyclable organic molecules, for the good of the over-network!

> (I miss 'substrate', can you work it into your next post)

I can hardly be blamed for your inability to distinguish between technical terms and buzzwords. This is a fairly standard (and logical) term for the physical implementation of a cognitive design, enough so that various science fiction authors have picked up on it.

> Oh and say 'superstition' some more!

Sure. YOU SUPERSTITIOUS FOOLS. Ah, sorry, it's still fun even when it's counterproductive.

- Singular Intellect

- Jedi Council Member

- Posts: 2392

- Joined: 2006-09-19 03:12pm

- Location: Calgary, Alberta, Canada

Unless I'm mistaken, it seems to me that Starglider is trying to suggest that any sentient original entity being copied will not undergo the process of death.

That's clearly incorrect; the fact is the replicated entity will have no sense of having died, but the original clearly has. Unless the copying method doesn't require the destruction of the original, in which case you then have a perfect clone.

It's a ridiculous notion; that's like saying that if you physically smash a radio transmitter when it's one of two transmitters of absolutely identical construction and makeup, broadcasting identical radio waves, you actually haven't destroyed the radio because the other one is still there.

- Starglider

- Miles Dyson

- Posts: 8709

- Joined: 2007-04-05 09:44pm

- Location: Isle of Dogs

Bubble Boy wrote:

> Unless I'm mistaken, it seems to me that Starglider is trying to suggest that any sentient original entity being copied will not undergo the process of death.

Death is really a physical process. But for subjective and moral purposes, the destruction of an entity for which a backup copy exists (and is then instantiated) is equivalent to losing the corresponding memories to amnesia (obviously it's not physically equivalent).

> That's clearly incorrect; the fact is the replicated entity will have no sense of having died, but the original clearly has.

Until it's dead, at which point those memories don't exist. Subjectively it's just like forgetting a nightmare.

> It's a ridiculous notion;

It seems ridiculous to you because your intuitions aren't calibrated for the general case. Of course, this is an argument from incredulity and thus invalid.

> that's like saying that if you physically smash a radio transmitter when it's one of two transmitters of absolutely identical construction,

There's the problem: your analogy is broken. People aren't physical objects; the correct (and close) analogy for intelligence and personality is software: a time-extended causal pattern that is instantaneously represented as a pattern of information in some physical substrate.

Here, I'll invoke MS Paint; perhaps that will help.

------------

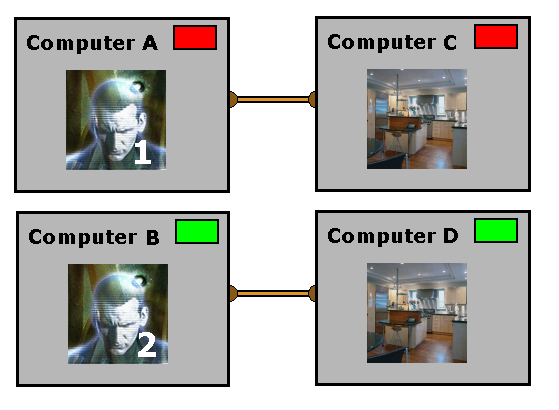

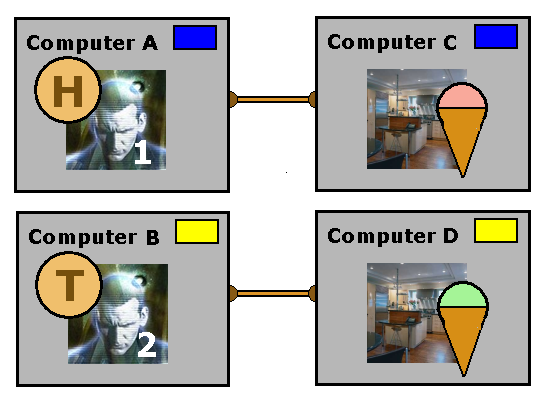

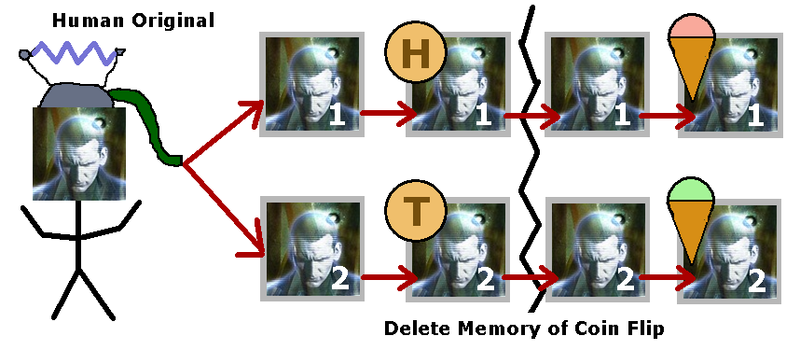

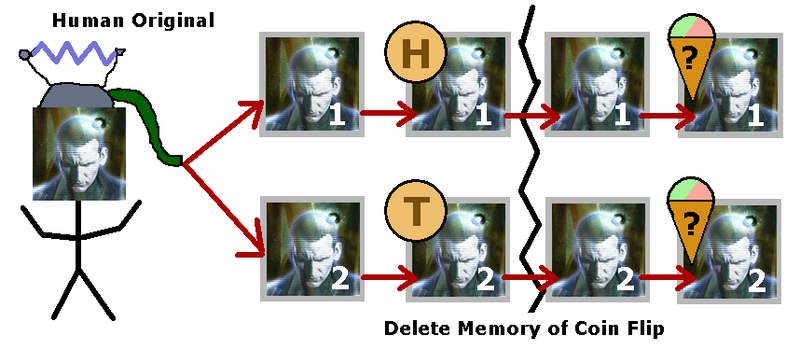

Ok, my henchlings have captured Stark and dragged him back to my underground lair. Using Mad Science, I produce a perfect record of his brain state. I upload this into two separate computers (A and B), which proceed to run simulations of his brain (Stark-1 and Stark-2). This is digital, so it's deterministic, but it's exquisitely detailed and more than adequate to qualify as a sentient clone of Stark. I connect each computer to another computer (C and D), which is running a virtual reality simulation of my kitchen.

Using my VR terminal, I log in to computer C and offer Stark-1 some ice cream. He can have strawberry or mint flavour, but I tell him that I will erase his memory of making the choice before I actually give him the ice cream.

Of course, Stark hates all kinds of ice cream equally. Fortunately, I have a simulated coin he can flip. Stark hates coins too, but since the simulation won't let him shove it up my nostril, he decides that heads will be strawberry and tails will be mint. He flips the coin. It comes up heads. I press a key to erase his memory of what the coin flip was. I then give him the simulated strawberry ice cream.

Using the 'replay log' feature on my VR terminal, I present exactly the same scenario to Stark-2, who of course reacts in exactly the same way. However, this time I load the coin to come up tails. I erase his memory and give him the simulated mint ice cream:

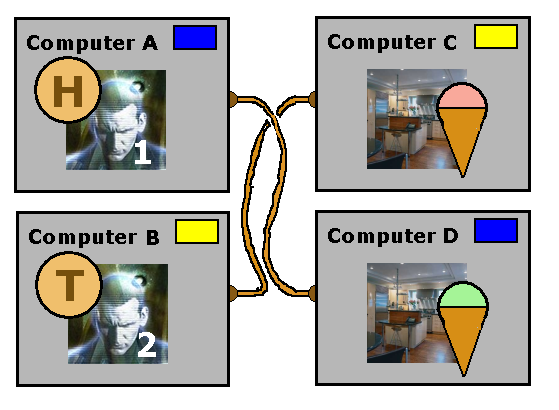

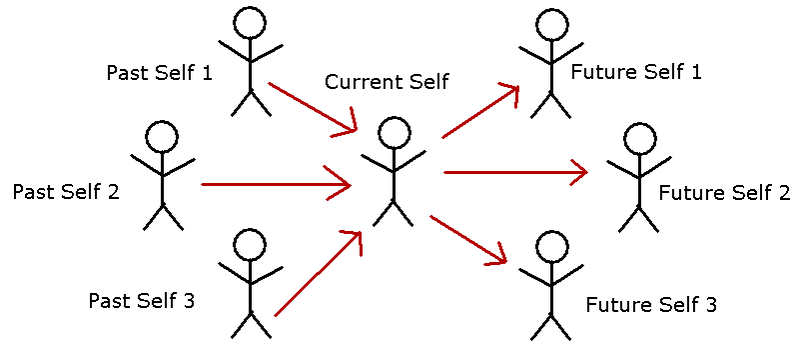

Now if I asked Stark-1 what he knew about his future self, immediately after the coin flip and before I erase the relevant piece of his memory, he'd say that if I was being honest then he's 100% likely to get strawberry ice cream. Stark-2 would say that he's 100% likely to get mint. I tell them that there's a completely identical copy running on another computer on the other side of the lab, but they're certain that whatever happens to the 'other Stark' has no bearing on their personal future. Their temporal/causal model of what's happening looks like this:

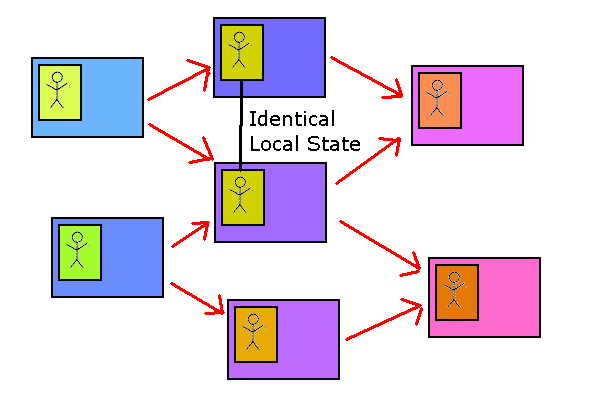

Next twist: I swap the network cables over at some point between the coin flip and the ice cream handout.

Now each instance is actually getting the opposite of what they expect. If I told each Stark I was going to do this and asked either one what they now know about their own future after the coin flip, they'd say 'no idea; I know what the other Stark is going to get, but what I'm going to get is determined by a coin flip I can't see'. Their mental model now looks like this:

In the first case each would assign 100% to one probability and 0% to the other (again, conditional on me being honest). In the second case they'd both say 50/50%. Both of these answers are wrong.

Imagine that instead of two computers, I run both copies of Stark on the same computer. Instead of swapping network cables, I just swap data structures. This is logically equivalent, right? Ok, how about I suspend both simulations, swap the contents of the memory blocks storing the brain state, then resume them.

OMG! Is this a digital transporter? Did I just kill two Starks and create two new ones? What if I set the simulation to move them around in memory between each time step? Am I killing and cloning thousands of Starks every second? How about if I wait until after the amnesia, then do a byte-by-byte swap while it's running? This will work fine of course, because the swap is a null operation; if the simulations are in lock-step the two bytes are always the same. What if I overwrite one Stark with the contents of the other? Of course there's no subjective or objective difference no matter what I do; swap, copy, dice, do nothing at all.

The only logical conclusion from this is that the following is happening:

The correct answer upon seeing the coin flip is 'I have a 75% subjective chance of getting the flavour the coin flip implies, and a 25% chance of getting the other flavour', regardless of whether I'm going to swap the network cables/processes/etc. or not.
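The 75/25 figure is stated without the arithmetic. One reconstruction that reproduces it rests on two assumptions that are ours rather than spelled out in the post: at the amnesia bottleneck you have the 50% subjective chance of continuing as either instance described earlier in the thread, and from your point of view the other instance's coin is a fair flip you haven't seen. A minimal Python sketch (the function `subjective_odds` is hypothetical) that enumerates the cases with exact fractions:

```python
from fractions import Fraction

HALF = Fraction(1, 2)
FLAVOUR = {"heads": "strawberry", "tails": "mint"}


def subjective_odds(my_flip: str, p_cross: Fraction = HALF) -> dict:
    """Chance of ending up with each flavour after the amnesia bottleneck.

    Assumptions (a reconstruction, not taken from the post):
      * with probability 1 - p_cross you continue as the instance that saw my_flip;
      * with probability p_cross you continue as the other instance;
      * the other instance's coin is fair and unseen: heads or tails, 1/2 each.
    """
    odds = {"strawberry": Fraction(0), "mint": Fraction(0)}
    # Stay on your own branch: you get the flavour your own flip implies.
    odds[FLAVOUR[my_flip]] += 1 - p_cross
    # Cross over: you get whatever the other (unseen) flip implies.
    for other_flip in ("heads", "tails"):
        odds[FLAVOUR[other_flip]] += p_cross * HALF
    return odds


print(subjective_odds("heads"))
# {'strawberry': Fraction(3, 4), 'mint': Fraction(1, 4)}
```

Passing `p_cross=Fraction(0)` gives the naive 100%/0% answer from the first case. Because the crossover chance is exactly one half, swapping the cables only exchanges the two branches, so under these assumptions the answer is the same with or without the swap, as the post claims.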

There are a lot of similar thought experiments you can do with digital intelligences, but that's enough MS Paint for tonight so I'll summarise for you; in nice causally clean situations like this, death is equivalent to amnesia when there's a backup and two substrates with the same state are in fact two instances of a single person. The 'subjective crossover point' thing is highly counterintuitive, particularly once you start looking at extending the scenario in time (under some conditions you can make this temporally nonlinear - yep, subjective experience doesn't necessarily have to come in the same order as physical time, at least under bizarre lab conditions), but there really is no other logical conclusion - any attempt to rationalise the human intuitive notion of personhood can be torn apart by a sufficiently exotic implementation or timeline.

The part I'm not completely confident about is generalising these 'causally isolated and deterministic environment' results to the general case of intelligences interacting freely with the rest of the universe. Every attempt to break this generalisation (by myself and by other cogsci people I've seen who've spent much longer thinking about it) has been shot down, but I still don't think I'd be confident about the answer until I heard it from a rational superintelligence.
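The claim that swapping or overwriting lock-stepped instances is a null operation can be checked in miniature. This is a minimal Python sketch under stated assumptions: `BrainState`, `perceive` and `erase_coin_memory` are hypothetical toys, a 'brain' is just a tuple of memories, and amnesia is modelled as deleting every coin memory so that the two instances converge exactly, as the thought experiment requires. It only demonstrates that no operation applied after convergence changes any output.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BrainState:
    """Hypothetical toy stand-in for a simulated brain: an ordered tuple of memories."""
    memories: tuple = ()


def perceive(state: BrainState, event: str) -> BrainState:
    """Deterministic update: the same state plus the same input always gives the same result."""
    return BrainState(state.memories + (event,))


def erase_coin_memory(state: BrainState) -> BrainState:
    """The amnesia step: remove every memory of the coin result."""
    return BrainState(tuple(m for m in state.memories if not m.startswith("coin:")))


def run(operation: str) -> dict:
    """Run Stark-1 (wired to computer C) and Stark-2 (wired to computer D).

    `operation` is applied after the amnesia step: "none", "swap" (exchange the
    two memory blocks) or "overwrite" (copy Stark-1's state over Stark-2's).
    """
    start = BrainState(("captured", "offered ice cream"))
    stark_1 = perceive(start, "coin:heads")  # fair flip seen by Stark-1
    stark_2 = perceive(start, "coin:tails")  # loaded flip seen by Stark-2

    stark_1 = erase_coin_memory(stark_1)
    stark_2 = erase_coin_memory(stark_2)     # now the two states are identical

    if operation == "swap":
        stark_1, stark_2 = stark_2, stark_1
    elif operation == "overwrite":
        stark_2 = stark_1
    elif operation != "none":
        raise ValueError(f"unknown operation: {operation!r}")

    # Computer C serves strawberry, computer D serves mint.
    return {"C": perceive(stark_1, "ate strawberry"), "D": perceive(stark_2, "ate mint")}


print(run("none") == run("swap") == run("overwrite"))  # True
```

Swapping before the amnesia step (the cable swap) would change which computer's instance remembers which flip; after the erasure nothing is left to distinguish the two instances, which is why the later swap cannot be detected.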

- Stark

- Emperor's Hand

- Posts: 36169

- Joined: 2002-07-03 09:56pm

- Location: Brisbane, Australia

Starglider wrote:

> Of course I'm not allowed to express self-doubt on SDN without conceding the argument, so please ignore all that. Forward, my fundamentalist materialist node-brothers! We will deconstruct the Stark instance into recyclable organic molecules, for the good of the over-network!

Thanks for trying to explain this issue: I generally agree with you in these threads, but I've never seen you lay out your rationale like this before, and I appreciate the effort.

- Resinence

- Jedi Knight

- Posts: 847

- Joined: 2006-05-06 08:00am

- Location: Australia

Eh, I still don't get it. Let's use the first part of your scenario, but modified so that Stark 1 has no idea what the helmet is doing, due to a magic wall:

So now Stark has been taken to your evil lair and hooked up to the helmet, which starts doing its stuff and copies his brain to a really, really powerful computer running an AI simulation (with Stark's brain data) and a simulation of your kitchen. Now that the copy is done, we don't need Stark anymore; we can modify the new Stark not to hate everything anyway:

Isn't Stark 2 a clone, not the original Stark? So "Stark" is dead? I mean, sure, no one else could tell the difference if you copied Stark 2 into a cloned Stark 1 body, but I'm pretty sure Stark is dead. Let's call that new one Stark 3. Are you saying Stark 3 IS Stark 1, or that "they are not different enough for it to matter"?

“Most people are other people. Their thoughts are someone else's opinions, their lives a mimicry, their passions a quotation.” - Oscar Wilde.

- Seggybop

- Jedi Council Member

- Posts: 1954

- Joined: 2002-07-20 07:09pm

- Location: USA

Here's my interpretation; Starglider, please tell me if this is consistent with what you're trying to say.

Consciousness, whatever it is, is something that only exists in the present instant of time, and it's based on your stored memories.

The 'you' that anyone is at any given instant is not the same person as they were a millisecond after or before, due to the changes in their archived memory, and you could say based on that that an individual is being destroyed and created an infinite number of times every second.

So, given that, creating a copy of an individual is basically the same as this default state of continual recreation/change; the only difference in this case is that instead of a linear path, the person has bifurcated into separate entities as their stored memories further diverge.

Would Original Stark experience death? Probably, except that under these conditions death loses any meaning: Original Stark would be dead in the same sense that the Stark that existed 5 years ago is dead.

my heart is a shell of depleted uranium

- Resinence

- Jedi Knight

- Posts: 847

- Joined: 2006-05-06 08:00am

- Location: Australia

Your explanation makes sense. But I still can't get past the feeling that moving a person from their brain into an artificial construct kills "them" (I sound fucking crazy) in the process. I suppose it doesn't really matter if there is no way of testing whether that's true or not. I'm leaning towards "doesn't kill them" now, since after running through my (broken) logic a few times I see that a "soul" (or some other intangible thing) would be required for the "original is dead" belief to be true.

At least I got an excuse to depict Stark brutally murdered by Zero Punctuation Gremlins. His hatred reminds me of Yahtzee.

- Stark

- Emperor's Hand

- Posts: 36169

- Joined: 2002-07-03 09:56pm

- Location: Brisbane, Australia

- Singular Intellect

- Jedi Council Member

- Posts: 2392

- Joined: 2006-09-19 03:12pm

- Location: Calgary, Alberta, Canada

Starglider's argument just doesn't work, or at least not in the way I'm understanding it.

Whether it's a software analogy or a hardware analogy, either system would be considered a separate entity regardless of whether they are absolutely identical physically and in operation.

Using his software concept, it would be like suggesting that if I kill one of two identical running copies of a computer process, I haven't actually done anything to the process, because the other copy is still running exactly as the killed one was.

If Bubble Boy(A) gets shot, he's dead and Bubble Boy(B) still being alive with all the exact same memories doesn't mean fuck all to Bubble Boy(A).

From an objective point of view, one might argue the existence of Bubble Boy hasn't changed, you've just killed one version of it.

However, having your absolutely perfect clone sitting next to you isn't going to make anyone suddenly think being killed no longer has meaning since they have a 'backup' sitting next to them. The individual in question still dies.
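In programming terms, the two software analogies in this thread differ on what is being tracked: Bubble Boy's argument follows identity (which running instance), Starglider's follows equality of state (which pattern). A minimal Python sketch, with a hypothetical `Mind` class standing in for a running process; it shows the two comparisons giving different answers, not which one personal survival should follow.

```python
import copy


class Mind:
    """Hypothetical stand-in for a running mind: just a list of memories."""

    def __init__(self, memories):
        self.memories = list(memories)

    def __eq__(self, other):
        # "Same pattern": two minds compare equal when their stored state is identical.
        return isinstance(other, Mind) and self.memories == other.memories


bubble_boy_a = Mind(["childhood", "this thread"])
bubble_boy_b = copy.deepcopy(bubble_boy_a)  # a perfect copy of A's state

print(bubble_boy_a == bubble_boy_b)  # True: identical pattern
print(bubble_boy_a is bubble_boy_b)  # False: two distinct instances

# "Shoot" instance A: drop it from the population of running minds.
population = [m for m in (bubble_boy_a, bubble_boy_b) if m is not bubble_boy_a]

print(any(m == bubble_boy_a for m in population))  # True: the pattern still exists
print(any(m is bubble_boy_a for m in population))  # False: instance A itself is gone
```

Both pairs of printouts are correct at the same time; the disagreement is over which comparison "the individual still dies" should be measured with.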

- Seggybop

- Jedi Council Member

- Posts: 1954

- Joined: 2002-07-20 07:09pm

- Location: USA

I think this appears so befuddling because the state of alive/dead is being perceived incorrectly. "Alive" is only relevant in the context of whether an organism is operating properly, and a specific human's identity is in constant flux.

5 minutes ago, there was a person we call Bubble Boy. At this current instant, there is also an entity we regard as Bubble Boy. However, they aren't entirely the same. The Bubble Boy of 5 minutes ago no longer exists (effectively, is dead).

"Death" is not an experience. It is an anti-experience. You do not experience death, you experience nothing. The subjective experience of a dead person, unfortunately, is null. Their experience is all in the past, the same as the experience of 5 minutes ago Bubble Boy.

That is all assuming the line of reasoning I posted earlier is the correct one.

- Sikon

- Jedi Knight

- Posts: 705

- Joined: 2006-10-08 01:22am

Given the limits of biological neurons, there is incentive to convert one's brain if or when the technology eventually becomes available, to obtain immortality.

In that scenario, the goal for me and presumably for most other people would be to prevent death, obviously.

Such a goal can be accomplished by gradual neural replacement of the original biological neurons with artificial nanorobotic neurons, a technology that is difficult to obtain but certainly possible within the laws of physics. There would be a process of change, but it is more or less analogous to how one's brain changes over the years as one goes from being a young child, to a teen, to an adult. Each month some of one's neural structure changes, but there's continuity of consciousness and no moment of death.

Is a 100-year-old the same person as when he was a 10-year-old? Perhaps not, depending how one defines the term, given how drastically he and his brain may have changed over the years. But it is clear he has not died.

In contrast to the above method of gradual neural replacement, sci-fi often depicts mind uploading where a person's brain is scanned (with the mechanism for seeing centimeters deep into solid material at submicron resolution usually left rather vague), then a copy is made, and the original brain is destroyed.

Actually, stating a copy is made isn't entirely right. Engineering and physical limitations in the real world prevent there from being any such thing as an absolutely perfect copy even with likely future technology. Every time anything is copied and manufactured, it is imperfect, even if tolerances are as tight as microns or better.

The result of such an imaginary scan-and-upload technique, if tried in the real world, is not a perfect copy. It is the creation of a new "brain" that resembles the original brain but has some errors and is different. Perhaps the quality of the imperfect reproduction is good enough that other people can't tell the difference and think of it as the same person, but it is an imperfect reproduction.

That's all the more blatant when one considers that the only known real-world method of getting submicron-level imaging of neural structure is serial sectioning, where a frozen brain is sliced into sections thin enough to be seen through and scanned at that resolution (or ablated away bit by bit). Anyone who knows anything about real-world engineering should see that the knife used for the slicing is not going to be infinitely thin, even if made of some futuristic material, as long as it is made from anything in the periodic table. That's one example of what can contribute to the imperfection of the overall reproduction.

For many means of imaging, there are physical limits, like how a light microscope is limited by the minimum wavelength of the photons and how they interact with material in their path. After all, one can't just set a microscope on a person's head and see deep into their brain, rather only seeing individual neurons when the photons can reach them without running into too much in the way; a similar situation applies with electron microscopes as well as optical ones.
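For a rough sense of the wavelength limit mentioned above: the classical Abbe diffraction limit gives the smallest resolvable separation for a light microscope as d = λ / (2·NA), where NA is the objective's numerical aperture. The values below (400 nm violet light, an oil-immersion objective with NA 1.4) are typical textbook figures chosen for illustration, not numbers from the post, and `abbe_limit_nm` is a hypothetical helper.

```python
def abbe_limit_nm(wavelength_nm: float, numerical_aperture: float) -> float:
    """Smallest resolvable separation (nm) under the classical Abbe limit d = λ / (2·NA)."""
    return wavelength_nm / (2 * numerical_aperture)


# Illustrative values: violet light and a high-NA oil-immersion objective.
d = abbe_limit_nm(400, 1.4)
print(f"{d:.0f} nm")  # 143 nm
```

The limit scales linearly with wavelength, which is why electron microscopes, with much shorter effective wavelengths, resolve far finer detail; the problem of reaching neurons buried deep in tissue applies to both.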

Some physical limits like those may not go away however advanced the future technology.

To use a very rough analogy, a high-velocity sabot round from a modern main battle tank is vastly more advanced technology than a spear of a couple thousand years ago, yet it is still made from elements in the periodic table, since that's what is physically available. Sci-fi like Star Trek may depict arbitrarily advanced scanning technology, like the transporter scanning people from orbit with submicron precision. However, that has nothing to do with what is really possible, just as they depict all sorts of imaginary technology performing complex manipulation of matter at a distance with no clear mechanism (e.g. no robot arms slowly assembling people in transporters): magic-tech.

As far as is known, there are only a limited number of particles existing in real-world physics, only so many choices, whether the imaging system is gathering data by hitting the target with photons, electrons, or one of various other options. There won't necessarily be arbitrarily capable scanning technology available even in the future.

MRI provides a non-destructive means of providing a scan of a whole brain from the outside, but, at least so far, such MRI imaging is limited to a resolution of around a cubic millimeter, not remotely close to good enough even for an imperfect copy. Resolution can be far greater if MRI is used on a thin slice of material, down to the micron level, but slicing up the brain first would be getting back into the problems with destructive "scanning."
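A back-of-envelope count of what that resolution gap means. The 1.2 litre (1.2 million mm³) brain volume is an assumed round figure for an adult human brain, not taken from the post; the 1 mm³ MRI voxel and the micron-level target come from the paragraph above.

```python
BRAIN_VOLUME_MM3 = 1.2e6   # assumed: roughly 1.2 litres for an adult brain
MRI_VOXEL_MM3 = 1.0        # whole-brain MRI resolution quoted above
MICRON_VOXEL_MM3 = 1e-9    # a 1 µm cube is (0.001 mm)^3 = 1e-9 mm^3

mri_voxels = BRAIN_VOLUME_MM3 / MRI_VOXEL_MM3
micron_voxels = BRAIN_VOLUME_MM3 / MICRON_VOXEL_MM3

print(f"MRI: {mri_voxels:.1e} voxels, 1 µm: {micron_voxels:.1e} voxels")
# MRI: 1.2e+06 voxels, 1 µm: 1.2e+15 voxels
print(f"ratio: {micron_voxels / mri_voxels:.0e}")  # ratio: 1e+09
```

Because voxel count scales with the cube of linear resolution, each further tenfold improvement below a micron multiplies the count by another thousand.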

MRI, CAT scans, PET, MEG/SQUIDs ... as far as I know, there's no method really known to be capable of non-destructive whole-brain imaging at the required resolution, even with likely future advancement. (I'd be rather interested if someone could provide evidence for such from an expert on one of those methods, but I haven't ever seen much of a good, specific argument.) Admittedly, future technology might be capable of that, but it is far from guaranteed.

Some have proposed trying to get around the problem by having nanorobots travel through capillaries in the brain, to image it from the inside up close. However, if that level of nanorobot technology is available, why on earth not just perform gradual neural replacement instead?

Let's consider a couple example scenarios.

1) Person A has his brain frozen and cut into slices with a thin diamond-edged knife, taking care to damage as little of it as possible. The slices are scanned by electron microscopes, and a computer reconstructs the neural pathways with as few errors as it can, although some errors are unavoidable in the real world even with future technology. The resulting software is put into a robot, which mostly acts like person A did.

Has person A avoided death? There's an entity in existence now that imperfectly resembles his personality. But is that enough? Even if it appears to be the same person to the average observer, that isn't necessarily proof of continuity of consciousness.

If one got a talented actor to undergo plastic surgery to look just like oneself, trained them for years until they emulated one's personality well enough to pass as oneself even to close acquaintances, and then died, one would still have died, even though a person closely but imperfectly resembling one's personality remained in the universe.

2) Person B undergoes gradual neural replacement. As new artificial neurons join, they remap themselves with the help of the rest of his brain, like natural neurons, even if real-world errors keep them from perfectly emulating the neurons they replaced. Each day some of his roughly one hundred billion original neurons are lost, but a person naturally experiences a degree of neuron loss anyway. He doesn't notice the change from day to day, and he definitely doesn't die on any particular day.
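As a rough illustration of how small each day's change could be, assume (purely hypothetically; no schedule is given here) that all of the roughly $10^{11}$ neurons are replaced at a constant rate over ten years:

$$\frac{10^{11}\ \text{neurons}}{10 \times 365\ \text{days}} \approx 2.7 \times 10^{7}\ \text{neurons/day}, \qquad \frac{1}{3650} \approx 0.027\%\ \text{of the original total per day}$$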

Though his brain changes over the years, he clearly has continuity of consciousness, like the 10-year-old versus 100-year-old analogy mentioned before.

After the process is complete, he may have options like speeding up his artificial neurons over time, or letting his "brain" grow beyond normal size to become a superintelligence that is to a normal adult human mind what an adult mind is to a young child's. Whether or not he pursues those possibilities, he has obtained immortality beyond the limits of senescence-prone biological systems.

Choice #1 might be a reasonable last resort if there were no alternative: if gradual neural replacement weren't available and one was about to die, or had died, anyway. In that case one might have nothing to lose and might as well try mind uploading as an attempt at immortality. But choice #2 seems less worrisome, offering far more certain continuity of consciousness.

To be safe, since I am nowhere near 99.9999% confident in the former, I'd prefer to be person B rather than person A.

Gradual neural replacement seems both preferable and more likely to be technologically obtainable than sci-fi-style mind scanning and uploading.

- Singular Intellect

- Jedi Council Member

- Posts: 2392

- Joined: 2006-09-19 03:12pm

- Location: Calgary, Alberta, Canada

Based on that logic, then, if you have multiple exact copies of anyone kicking around, you can freely abuse, torture and murder any number of copies to your heart's desire, so long as you leave one unharmed and intact, because not one of them qualifies as a separate person; they are merely extensions of one person.

Seggybop wrote: I think this appears so befuddling because the state of alive/dead is being perceived incorrectly. "Alive" is only relevant in the context of whether an organism is operating properly, and a specific human's identity is in constant flux.

5 minutes ago, there was a person we call Bubble Boy. At this current instant, there is also an entity we regard as Bubble Boy. However, they aren't entirely the same. The Bubble Boy of 5 minutes ago no longer exists (effectively, is dead).

"Death" is not an experience. It is an anti-experience. You do not experience death, you experience nothing. The subjective experience of a dead person, unfortunately, is null. Their experience is all in the past, the same as the experience of 5 minutes ago Bubble Boy.

That is all assuming the line of reasoning I posted earlier is the correct one.

After all, they aren't really 'dead', right? They're existing just fine in that other copy over there, all that screaming and begging you to stop are memories that no longer exist, therefore it's nothing more than just a case of amnesia.

- Seggybop

- Jedi Council Member

- Posts: 1954

- Joined: 2002-07-20 07:09pm

- Location: USA

No, each one would be a new individual, so they'd have whatever rights an individual is normally assigned. They're very similar individuals, but not identical. They diverge immediately upon receiving different input.

Bubble Boy wrote: Based on that logic then, if you have multiple exact copies of anyone kicking around, you can freely abuse, torture and murder any number of copies to your heart's desire, so long as you leave one unharmed and intact because not one of them qualifies as a separate person, they are merely extensions of one person.

They have the same rights as any individual. The morality of doing nasty things to people if they don't remember it afterwards is a different debate.

Quote: After all, they aren't really 'dead', right? They're existing just fine in that other copy over there, all that screaming and begging you to stop are memories that no longer exist, therefore it's nothing more than just a case of amnesia.

my heart is a shell of depleted uranium

- Shroom Man 777

- FUCKING DICK-STABBER!

- Posts: 21222

- Joined: 2003-05-11 08:39am

- Location: Bleeding breasts and stabbing dicks since 2003

- Contact:

If you create an exact clone of me and kill me, I'm dead. The clone isn't. But I am. And the clone is going to be pretty clonely.

It's just like that movie with two Ahnulds. There's an Ahnuld and there's an Ahnuld.

I mean, come on. If you clone me and kill me, I'm dead. It doesn't matter if the clone has all my thoughts and memories, if my clone has all my thoughts and memories yet looks like Joseph Stalin, or if my clone looks like me yet has Joseph Stalin's thoughts and memories. For ME, I'm dead. The clone might have the same body, the same thoughts and the same memories as me, but he's a different person. He's not me. I'm dead and I'm covered in bees.

It's like having a car of a particular make and model, and you squish it. Then you are given another car of that same make and model, with the same everything, even the same music CDs in the stereo, the same crumbs, everything. Is it the old car? No. The old car's squished. I'm Chinese cat food.

That analogy someone mentioned a while ago, with the whole dentist thing and the anesthesia, it's a false analogy. If the dentist cloned you and your thoughts in another body, and your original anesthetized body and brain gets destroyed - rest assured, you're NOT waking up.

The clone will wake up and think it's you, but it's not. You're dead and no one is going to know it. The clone is gonna live as you, think it's you, act like it's you, it will be you. But you are dead. Dead. Dead! Deader than your dead mom!

This is why that pod people shit is so scary.

"DO YOU WORSHIP HOMOSEXUALS?" - Curtis Saxton (source)

shroom is a lovely boy and i wont hear a bad word against him - LUSY-CHAN!

Shit! Man, I didn't think of that! It took Shroom to properly interpret the screams of dying people

Shroom, I read out the stuff you write about us. You are an endless supply of morale down here. :p - an OWS street medic

Pink Sugar Heart Attack!

- Admiral Valdemar

- Outside Context Problem

- Posts: 31572

- Joined: 2002-07-04 07:17pm

- Location: UK

The Ship of Theseus has been a philosophical conundrum for thousands of years. The application here is a little different, but as Sikon pointed out and has always been my contention, you cannot perfectly copy any physical structure that complex and call it an exact copy. Especially when micro-scale structures can make all the difference here.

Physical limits and the innate belief that any superficially perfect clone is not "you" uphold the idea that one cannot simply make a clone, copy the neural engrams to a hard disk and then download them into the new body. Shroom mentioning The Sixth Day is a good point. The protagonists and antagonists undergo many cloning procedures, with a memory-capturing device able to read their brains post mortem for their new body to use. In several scenes there are two or more instances of one person about. This, clearly, should tell even the less philosophically inclined viewer that a clone, even with up-to-date memories, isn't really you in the end. You can't have such a copy share your consciousness, hence it is not a totally perfect copy.

- Starglider

- Miles Dyson

- Posts: 8709

- Joined: 2007-04-05 09:44pm

- Location: Isle of Dogs

- Contact:

I focused on operations performed after the upload, in the digital domain, because they're causally cleaner and less ambiguous. However, if you accept that two digital copies of the uploaded Stark are the exact same person before they diverge, then you're going to have to come up with a damn good reason why the biological Stark and uploaded Stark aren't the exact same person before they diverge. They're physically different, but they have the exact same causal dependence on prior Stark history.

Resinence wrote: Isn't Stark 2 a clone, not the original Stark?

Not by any reasonable definition. The five seconds or so of memories that the biological version acquired before he lost consciousness for good are a trivially small part of the 'Stark' identity.

Quote: So "Stark" is dead?

Quote: I'm pretty sure Stark is dead.

It's worth outlining exactly what you're trying to do here:

1) 'Sentient beings' don't exist as physical primitives in the real universe. The universe just has piles of particles (physicists would probably say something like 'mist of probability amplitudes' but it doesn't make much difference). Any concept of 'sentient being' is something that you have invented to model a kind of pattern that you've experienced in reality.

2) This is complicated by two factors; firstly you have a workable cognitive subsystem for modelling 'people' supplied to you by evolution, but this is highly informal and was built by pure trial-and-error in an environment where there was no practical distinction between 'person' and 'body'. Secondly, this is tightly connected to your reflective self-concept (also horribly fuzzy and broken due to being the output of a trial-and-error design process) and goal system (self-preservation).

3) Given sufficiently good technology, there is no subjective or objective difference between these theories. Which is to say, calling an upload 'a new individual that's a perfect clone of another individual' or 'another instance of the same individual' makes no difference to predictions you can make about objective reality or the experiences that some intelligence of a specific makeup will have. Thus this isn't a scientific debate; these models aren't distinguishable by experimental results. Choosing between them isn't a task that has predictive utility; it's purely for goal-system formulation purposes (i.e. working out what you should value).

4) However, we can still judge the 'one individual, many instances' model (actually 'similarity distance in mind space' but we'll get to that in a bit) to be better than 'every instance is a separate individual' model based on two criteria; internal consistency and complexity. The 'every body hosts a unique indivisible individual' idea sounds simple, but it isn't once you start trying to account for edge cases. When you look at more and more special cases (e.g. copying Stark uploads around in computer memory), you end up having to pile a ridiculous number of arbitrary conditionals and exceptions onto this model to make it work. And for what? Why slavishly try to rationalise the broken informal implementation of self-preservation evolution happened to lumber you with?

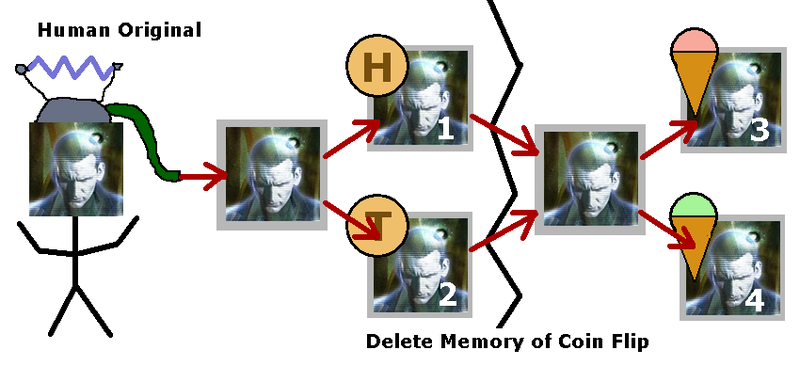

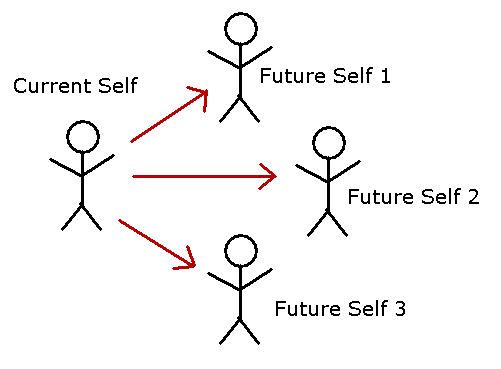

Let's look at another case that breaks the 'unitary indivisible individual' argument into fragments; the many-worlds interpretation of quantum mechanics. Now personally this strikes me as by far the simplest and most elegant explanation and quite likely to be true; people who dislike it often seem to do so for the irrational reason that it threatens their primitive little self-image. Never mind that though; given a sufficiently powerful computer, I could digitally simulate many-worlds (to some degree of branching) and run uploaded humans in it, so whether the actual universe works like this isn't critical.

First consequence; there are multiple future versions of you that all have exactly the same claim to being the 'future you'. Imagine you watch the output of a Geiger counter for three seconds. In one possible future you see no decays, in another you see one, in another you see two...

There is literally no basis for favouring any of these future yous over the others. If you can't do it for simple physical causality, what makes you think you can do it for perfect cloning? Remember, in the digital simulation I can always arrange things such that there is no effective difference between how the 'temporal causality' and the 'flash cloning' works. The only non-arbitrary thing you can key on is causal dependency, and that's the same in both cases. It gets better though, because we can also have multiple past states converging on a single current state;

It's difficult to come up with intuitive macroscale examples of this (except for experimenters inducing amnesia), but at the particle level it's easy; quantum-scale events can cancel out such that the net result is the same as if neither happened. Now a single instance of you has multiple possible pasts (the differences between which you can't remember by definition) and futures;

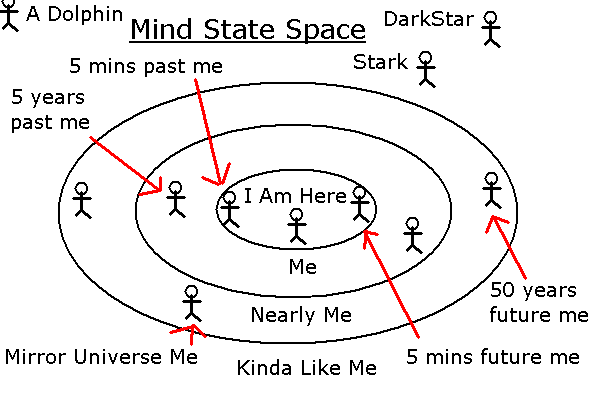

But wait. The stick-man symbol actually represents an entire universe (or rather instantaneous universe-state) here. Most people would agree that 'me' is not the same thing as 'the universe', in fact 'me' is a very very small part of 'the universe'. As such the number of possible states that a mind can have is vastly smaller than the number of possible states the universe can have, and completely identical minds exist within separate universes;

Every universe in that timeline (actually time-directed-graph) diagram is distinct, but two of them contain Starks that are absolutely identical. You have even less basis for distinguishing between these instances than you do for your future selves; they're exactly identical, they just have different probability distributions over likely 'future experiences', as I illustrated in my earlier thought experiment. In actual fact it does not make sense to break it down like this; all instances of a single mind state effectively have a shared joint probability distribution over 'possible future experiences' (in many worlds, just the relative frequencies of derived you-like intelligences).
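The "shared distribution" idea can be sketched as a toy calculation. Everything in it is hypothetical: the universe-state labels, the branch weights (standing in for relative frequencies of derived you-like intelligences) and the prior are made up for illustration, not taken from any physics. Two distinct universe-states, U1 and U2, contain an identical mind-state; U4 is reachable from both (two pasts converging); and the successors mirror the Geiger-counter example of seeing zero, one or two decays. Pooling over every universe that contains the mind-state gives one distribution over its possible future experiences:

```python
from collections import defaultdict
from fractions import Fraction

# Hypothetical toy model: universe-state -> [(successor universe-state, branch weight)].
# Weights are illustrative stand-ins for relative branch frequencies, not physics.
BRANCHES = {
    "U1": [("U3", Fraction(1, 2)), ("U4", Fraction(1, 2))],
    "U2": [("U4", Fraction(1, 3)), ("U5", Fraction(2, 3))],
}

# The mind-state contained in each universe-state (hypothetical labels).
# U1 and U2 are distinct universes that contain an identical Stark.
MIND = {
    "U1": "Stark-A",
    "U2": "Stark-A",
    "U3": "Stark-saw-0-decays",
    "U4": "Stark-saw-1-decay",  # reachable from both U1 and U2
    "U5": "Stark-saw-2-decays",
}

# Hypothetical prior weight of each current universe-state.
PRIOR = {"U1": Fraction(1, 2), "U2": Fraction(1, 2)}


def future_experiences(mind_state):
    """Pool the successor distributions of every universe-state that contains
    `mind_state`, weighting each by its prior, and normalise the result."""
    pooled = defaultdict(Fraction)  # Fraction() starts at 0
    total = Fraction(0)
    for universe, mind in MIND.items():
        if mind != mind_state or universe not in BRANCHES:
            continue
        weight = PRIOR.get(universe, Fraction(0))
        total += weight
        for successor, p in BRANCHES[universe]:
            pooled[MIND[successor]] += weight * p
    if total == 0:
        return {}
    return {future: w / total for future, w in pooled.items()}


if __name__ == "__main__":
    for future, w in sorted(future_experiences("Stark-A").items()):
        print(future, w)
```

With these made-up weights, `future_experiences("Stark-A")` assigns 1/4, 5/12 and 1/3 to seeing zero, one and two decays. The only point of the sketch is that the query is keyed on the mind-state, not on which universe-state happens to host it.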

In actual fact the sharp me/universe distinction is as silly and pointless as the sharp me/someone else distinction. Humans are highly time-extended and space-extended processes; it takes whole milliseconds for pulses to get from one side of the brain to the other, and the absolute degree of causal interdependency is limited by the speed of light. In many worlds a hugely complex causal mesh of billions of trillions of histories exists within the space of an eyeblink (actually within the space of a single neuron firing, but you get the idea). A sharp self/not-self distinction is just delusion.

So you say you're OK with cryofreezing (if not, how the fuck are you OK with sleep)? How about I use nanomachines to freeze your brain, rearrange it to put it back into the state it was in a millisecond ago, then unfreeze it: did I just kill you and create a clone that thinks it was you? How about a microsecond? How about ten minutes? How about a year?

How about reverting only half your brain? A quarter? A single neuron? How about I chop your brain in half, let the two halves think on their own for a bit, then reconnect them? How about I reconnect each to a copy of the missing half? How much brain tissue do I have to destroy to 'kill' you anyway, assuming I can keep the brain alive regardless? 10%? 50%? 90%? If I replace the tissue I destroyed with fresh tissue, at what fraction is it still 'you'?

If you're still trying to defend your precious binary self/other distinction, stop for a moment and ask yourself: 'What conceivable mental process am I going to use to generate a meaningful threshold for "you-ness"?' Contrary to what religious nuts might have you believe, 'pulling it out of your ass' does not count as 'meaningful'.

Give it up. You're creating this horribly elaborate structure for no reason at all. There is a very simple model that can effortlessly answer any bizarre thought experiment you can throw at it;

Yes, there's a bit of a mismatch between what conscious experience seems to be like and what this model implies it's actually like. Get over it. There was already a vast mismatch even without looking at cloning minds; the human self-model is absolutely rife with inaccuracy and delusion. Every time you let go of a silly intuitive axiom, be it in physics or cognitive science, you're making progress towards an unbiased view of the universe.

Nope. That's better than the typical naive attempt to come up with a secular notion of 'soul', but it's not correct. The best candidate we have for an 'instant of time' is a Planck time. Human consciousness can't exist without whole milliseconds to work with (at least) and vast distances (by subatomic standards). Furthermore, a static copy of a mind isn't conscious. Clearly a useful model has to talk about causal processes.

Seggybop wrote: Consciousness, whatever it is, is something that only exists in the present instant of time, and it's based on your stored memories.

Strictly true, but as I've said, the binary you/not-you distinction is not only hopelessly overspecific, it doesn't work even for frozen instants of time.

Quote: The 'you' that anyone is at any given instant is not the same person as they were a millisecond after or before

That is correct.

Quote: So, given that, creating a copy of an individual is basically the same as this default state of continual recreation/change; the only difference in this case is that instead of a linear path, the person has bifurcated into separate entities as their stored memories further diverge.

Pretty much. The Stark of 5 years ago had a direct continuation, and the Stark I just mind-ripped lost 5 seconds of memories, which obviously most sentients would prefer to avoid if they could, but that's it.

Quote: Would Original Stark experience death? Probably, except that under these conditions, death loses any meaning-- Original Stark would be dead in the same sense that the Stark that existed 5 years ago is dead.

Yeah, I know. The problem is that this part of your mind is tied right into your self-preservation drive, and that's pretty much the single strongest part of your goal system. Any attempt to tamper with it, or even think about tampering with it, pops up a deep feeling of discomfort. Usually this is good; it's what makes suicide cults rare. However, in this case it's bad.

Resinence wrote: Your explanation makes sense. But I still can't get past the feeling that moving a person from their brain into an artificial construct kills "them" (I sound fucking crazy) in the process.

To make a pop-culture reference, it's like the new BSG Cylons being unable to think about the Final Five. If it's any consolation, it took me years to accept these results, and I had the benefit of patient and highly intelligent people explaining it to me, plenty of time to think about it and several years studying AI and cognitive science full-time.

We're talking about subjective experience, not objective physical results. The two can be straightforwardly decoupled, and as I've pointed out, there's no way to argue for maintaining that coupling without accepting the conclusion that you are in fact the whole universe. While that's not objectively wrong, it's pretty useless and not what a normal human would want out of a self-model.

Bubble Boy wrote: Using his software concept, it would be like suggesting that if I kill a computer process that is running two copies of itself, I haven't actually done anything to the one process because the other is still running exactly as the killed one was.

Argument from personal incredulity, look it up.

Seggybop wrote: "Death" is not an experience. It is an anti-experience. You do not experience death, you experience nothing. The subjective experience of a dead person, unfortunately, is null. Their experience is all in the past, the same as the experience of 5 minutes ago Bubble Boy.

This is a very important point. A lot of posters here are implicitly assuming that experience continues after death in some fashion, simply by saying that 'X perceives being dead'. This is similar to people who ask 'what does the universe look like from outside'. It just doesn't work like that; subjectively, there is nothing outside of conscious experience to have a viewpoint from.

Don't be ridiculous. That's exactly equivalent to saying 'I injected someone with a long-term memory blocker, then raped and abused them, which they then forgot about entirely'. Any sane human would condemn that as horrible (though there may be some debate on whether using the memory blocker made things better or worse). Utility/morality attaches directly to subjective experience; it has to, because in the long run nothing persists forever.

Bubble Boy wrote: Based on that logic then, if you have multiple exact copies of anyone kicking around, you can freely abuse, torture and murder any number of copies to your heart's desire,

Shroom Man 777 wrote:I mean, come on. If you clone me and killed me, I'm dead.

- Shroom Man 777

- FUCKING DICK-STABBER!

- Posts: 21222

- Joined: 2003-05-11 08:39am

- Location: Bleeding breasts and stabbing dicks since 2003

Starglider wrote: Argument from personal incredulity, look it up.

But this argument is different. This argument hinges on the personal perspective of the person/mind that is subjected to cloning and then destruction.

If you sedated me and copied my thoughts and memories into a clone and marked that clone's body with an X, would I wake up to find myself in a new body, with an X on my chest? No. I would wake up in my original body without an X.

If you destroy my original body and mind, I would not be waking up at all.

Now, maybe that clone would wake up and since he has my thoughts and my memories, he would be surprised with the X mark on his chest.

Now, just because my clone and I are identical does not mean that there aren't two separate distinct entities - even if the two separate distinct entities are identical in appearance, composition and thought processes.

Just because you've copied my thoughts and memories and physical attributes, does not mean you have stuck a tube in my head and siphoned me into a new body.

I am still trapped in my original body. And my body and thoughts are separate from the body and thoughts of that clone copy, who is similarly restrained.

"DO YOU WORSHIP HOMOSEXUALS?" - Curtis Saxton (source)

shroom is a lovely boy and i wont hear a bad word against him - LUSY-CHAN!

Shit! Man, I didn't think of that! It took Shroom to properly interpret the screams of dying people

Shroom, I read out the stuff you write about us. You are an endless supply of morale down here. :p - an OWS street medic

Pink Sugar Heart Attack!

- Singular Intellect

- Jedi Council Member

- Posts: 2392

- Joined: 2006-09-19 03:12pm

- Location: Calgary, Alberta, Canada

Shroom Man 777 wrote: Now, just because my clone and I are identical does not mean that there aren't two separate distinct entities - even if the two separate distinct entities are identical in appearance, composition and thought processes.

Just because you've copied my thoughts and memories and physical attributes, does not mean you have stuck a tube in my head and siphoned me into a new body.

I am still trapped in my original body. And my body and thoughts are separate from the body and thoughts of that clone copy, who is similarly restrained.

This is an extremely simple concept, and it boggles my mind that anyone could argue with it.

This proposed process of immortality would seem like immortality, but only to the most recently created copy. From his/her point of view, they've lived the entire existence recorded in the memories contained within the brain.

This argument is practically identical to the whole Trekkie "transporters don't kill people" mentality.

- Rye

- To Mega Therion

- Posts: 12493

- Joined: 2003-03-08 07:48am

- Location: Uighur, please!

Shroom Man 777 wrote:
Starglider wrote: Argument from personal incredulity, look it up.

But this argument is different. This argument hinges on the personal perspective of the person/mind that is subjected to cloning and then destruction.

If you sedated me and copied my thoughts and memories into a clone and marked that clone's body with an X, would I wake up to find myself in a new body, with an X on my chest? No. I would wake up in my original body without an X.

If you destroy my original body and mind, I would not be waking up at all.

Now, maybe that clone would wake up and since he has my thoughts and my memories, he would be surprised with the X mark on his chest.

Now, just because my clone and I are identical does not mean that there aren't two separate distinct entities - even if the two separate distinct entities are identical in appearance, composition and thought processes.

Just because you've copied my thoughts and memories and physical attributes, does not mean you have stuck a tube in my head and siphoned me into a new body.

I am still trapped in my original body. And my body and thoughts are separate from the body and thoughts of that clone copy, who is similarly restrained.

The thing that'll really "bake your noodle", to get all Matrixy (disregarding the question of who on Earth bakes noodles rather than boils them), is whether or not a gradual introduction to the computer, as an extension of your senses and of the normal processes of your brain in preparation for storage, would allow "you" to continue on.

I think perhaps if the process of copying into new robotic bodies was described as a more interactive process, people would grasp it as continuing on rather than "merely" being thought-cloned, though it would pretty much be the same thing (people seem to have little difficulty regarding themselves as the same person when they come back from unconsciousness, for instance). I think the difficulty people are having comes from the sensory nature of human experience. It's all well and good copying minds, but if people don't experience the process and their new brain, they will have difficulty recognising themselves as a migratory part of it.

I do think, however, that without a physical connection, two separate brains with separate experiences would be different individuals. Split-brain experiments show different individuals within the same person.

I don't get what's going on in those pictures at all, though.

EBC|Fucking Metal|Artist|Androgynous Sexfiend|Gozer Kvltist|

Listen to my music! http://www.soundclick.com/nihilanth

"America is, now, the most powerful and economically prosperous nation in the country." - Master of Ossus

- Zixinus

- Emperor's Hand

- Posts: 6663

- Joined: 2007-06-19 12:48pm

- Location: In Seth the Blitzspear

To answer the original question, I would say no. A person uploaded into a computer or equivalent is not the same as the one walking around in wetware.

My reasoning is semantics, I guess: even if you copy the neuron activity of the human brain, being in a computer is very different from being in a biomechanical body.

A computer is purely digital, while a human brain has hormones that influence its behaviour.

Even if you copy or manage to perfectly emulate this behaviour, you still have the problem that the person is in a computer and not in a human body.

The human brain is very specifically "designed" to work a human body. Its base instincts, base motivations and underlying reflexes are those of an animal.

A computer is designed to... compute.

This very line will make the uploaded Joe different from the wetware Joe, even if the uploaded Joe feels that he is the same.

Quote: Suppose science develops to the point where we can bring someone back from the dead, even though they've been gone, say, for a week. Are "you" still "you"?

Obviously it depends on how much of the original brain structure is restored. Depending on the degradation of the cells, I would say very little. The "information" stored in the brain is lost.

Quote: The universe could be full of indistinguishable copies of Stark (or, terrifyingly, Starglider).

Or Shep. Think what would happen if Shep were chosen.

Credo!

Chat with me on Skype if you want to talk about writing, ideas or if you want a test-reader! PM for address.

Gigaliel

- Padawan Learner

- Posts: 171

- Joined: 2005-12-30 06:15pm

- Location: TILT

Shroom Man 777 wrote:
Starglider wrote: Argument from personal incredulity, look it up.

I am still trapped in my original body. And my body and thoughts are separate from the body and thoughts of that clone copy, who is similarly restrained.

If I understand Starglider's fancy graphs, the appropriate question here is 'why?' Where are 'you' coming from, if not the processes in your brain?

Take your example. What if the scientist lied and painted the X on ShroomA instead of ShroomB, then switched the tanks and everything so you'd never know and there would never be any proof? This would be somewhat easier to do in a computer, obviously.

ShroomA would then go on to act as ShroomB would have, and vice versa. Have their minds traded places, then, or is it a farce? What if, with the powers of mad science, we did switch their minds? Did 'you' just switch places? Both minds are identical, so is identity now determined by who has the original body? By changing the brain's wiring, did two people just die and get replaced with identical ones? What if we just switch brains? Do we need some kind of budget to keep track of 'you'?

What if you copy the Copy and then overwrite the Original with it? Since we're pretending the error margins are insignificant, there is no difference; nothing changes. Did you die despite your mind being replaced by itself?

You could pull any of these tricks thousands of times and, by what you say, have killed thousands of people. Awaken the bodies and there is no difference.

This brings us to what I really hope is Starglider's point, or I will look like a doofus: is there any real difference? Do 'you' only inhabit one mind, with the other being a copy? The answer would seem to be no; 'you' are both minds at the same time. To take a math example, it's a bit like saying Shroomy(x) = Shroomy(x) regardless of the physical location the function is operating in, assuming identical input.

So, if you decide to fake-kill one, then wipe his mind back to the template and show him the recording, it'd be the same as if he had -actually- died and a 'copy' saw it, since we have (hopefully) established that identical mind means identical self, which is what I'm getting at.