ScienceDaily wrote:

The ENCODE data are rapidly becoming a fundamental resource for researchers to help understand human biology and disease. More than 100 papers using ENCODE data have been published by investigators who were not part of the ENCODE Project, but who have used the data in disease research. For example, many regions of the human genome that do not contain protein-coding genes have been associated with disease. Instead, the disease-linked genetic changes appear to occur in vast tracts of sequence between genes where ENCODE has identified many regulatory sites. Further study will be needed to understand how specific variants in these genomic areas contribute to disease.

"We were surprised that disease-linked genetic variants are not in protein-coding regions," said Mike Pazin, Ph.D., an NHGRI program director working on ENCODE. "We expect to find that many genetic changes causing a disorder are within regulatory regions, or switches, that affect how much protein is produced or when the protein is produced, rather than affecting the structure of the protein itself. The medical condition will occur because the gene is aberrantly turned on or turned off or abnormal amounts of the protein are made. Far from being junk DNA, this regulatory DNA clearly makes important contributions to human health and disease."

Much Less Junk DNA Than Previously Thought


amigocabal

- Jedi Knight

- Posts: 854

- Joined: 2012-05-15 04:05pm

Much Less Junk DNA Than Previously Thought

It seems that much of the DNA researchers once thought of as junk DNA is not so worthless.

- Guardsman Bass

- Cowardly Codfish

- Posts: 9281

- Joined: 2002-07-07 12:01am

- Location: Beneath the Deepest Sea

Re: Much Less Junk DNA Than Previously Thought

There's a good, longish article at Ars Technica about it, including some concerns with the results:

Ars Technica wrote:

The completion of the human genome, rather than being the huge breakthrough it was presented as, raised almost as many questions as it answered. Less than two percent of it encoded a protein, and only about five percent ended up being conserved relative to many of our fellow mammals. The rest of it seemed like a bit of a mess—damaged viruses, long stretches of repetitive sequence, and huge stretches devoid of any genes.

The ENCODE project was formed to make sense of that mess. A huge consortium of labs, ENCODE started performing a massive assay of pretty much everything to do with DNA: where proteins stuck to it, how it was packaged, how it was modified, etc. Now, the consortium is out with a massive number of papers—six alone in today's Nature, and dozens to follow in the days to come, scattered through many different journals. The results are staggering: over 1,500 different types of data, assayed across the whole genome, from 147 different cell types. It will feed researchers for years to come.

And it suggests that more of the chaos in the genome may be useful, although that suggestion comes with some big caveats.

First, the data. The researchers used a huge variety of techniques to look at just about anything that can happen to the DNA inside a cell. They took 119 proteins that bind to DNA and found every single site in the genome that they stuck to. They looked for signs of a chemical modification of DNA called methylation that can change the expression of genes nearby. The locations of the proteins that structure chromosomes, called histones, were also checked, and areas where the chromosome structure is relatively accessible were pinned down. Regions of the chromosomes that are close to each other inside a cell were mapped. Every single RNA within a cell was sequenced and resequenced.

Once a single cell type, like liver cells, was checked, the authors moved on to look at another. And another. The end result was 1,640 different data sets, covering a total of nearly 150 different cell types. It's a staggering amount of work; calling ENCODE the Large Genome Collider would provide a real sense of the scale of this effort.

Functional or not?

What does it tell us about the genome? Some interesting things, although they're a bit tough to interpret. With data on this scale, we can't actually look at each piece of data on every piece of DNA. So we have to come up with generic definitions of things like function: an RNA is made there, a protein binds there, etc. ENCODE chose to go extremely broad: "Operationally, we define a functional element as a discrete genome segment that encodes a defined product (for example, protein or non-coding RNA) or displays a reproducible biochemical signature (for example, protein binding, or a specific chromatin structure)." Basically, if anything came out of any of these assays regarding a base, it was considered functional.

And by that definition, most of the genome is doing something. The figure was about 80 percent in these assays, and by adding more cell types, the authors suggest we could get the number even higher.

But there are some problems with that figure. For instance, many of the non-functional viruses and mobile genetic elements will still contain the protein binding sites that used to be critical to their function. So even though these will be traditionally classified as "junk DNA," they'll be considered functional under the ENCODE definition. More generally, proteins that bind DNA are not very picky about their binding sites, so they will create some indication of function by accident; they can also lead to RNA production nearby, looping other bases into an apparent function.

That said, the study does provide some solid indications that more of the genome is doing useful stuff than many previous studies have estimated. More stringent criteria can be used—multiple proteins in close proximity, an accessible chromosome configuration, etc. These reduce the percentage of the genome that is likely to be doing something biologically useful down to about 10 percent—which is lower, but still a lot higher than the five percent that is conserved throughout mammalian evolution.
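To make the difference between the broad and the stricter definitions concrete, here is a minimal Python sketch. It assumes toy per-base tracks (one True/False per base for each assay); the assay names, the data, and the `fraction_functional` helper are all hypothetical, and requiring at least `min_assays` agreeing signals is only a crude stand-in for the stricter criteria in the paragraph above, not ENCODE's actual pipeline.

```python
from typing import Dict, List

# Hypothetical toy data: one boolean per base for each assay (True = signal seen).
# These values are invented for illustration, not real ENCODE measurements.
Tracks = Dict[str, List[bool]]


def fraction_functional(tracks: Tracks, min_assays: int) -> float:
    """Fraction of bases flagged by at least `min_assays` different assays.

    min_assays=1 mimics the broad "any reproducible biochemical signature" rule;
    larger values are a crude stand-in for stricter, multi-evidence criteria.
    """
    lengths = {len(track) for track in tracks.values()}
    if len(lengths) != 1:
        raise ValueError("all tracks must cover the same bases")
    n_bases = lengths.pop()
    flagged = 0
    for i in range(n_bases):
        # Count how many assays show a signal at base i.
        hits = sum(track[i] for track in tracks.values())
        if hits >= min_assays:
            flagged += 1
    return flagged / n_bases


toy_tracks: Tracks = {
    "tf_binding": [True, False, True, False, False, True, False, False, True, False],
    "open_chrom": [True, True, False, False, False, True, False, True, False, False],
    "rna_made":   [False, True, True, False, True, True, False, False, False, False],
}

print(fraction_functional(toy_tracks, min_assays=1))  # broad definition
print(fraction_functional(toy_tracks, min_assays=2))  # stricter definition
```

With `min_assays=1` (any signal counts), 7 of the 10 toy bases are flagged; requiring two agreeing assays drops that to 4. The direction of the drop, not the specific numbers, is the point.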

The authors mention a bit about where that additional five percent might come from. Some of it appears to be primate specific, in that it's common to primates but not found in other mammals. That hints that it might be doing something specific in our own lineage. Further evidence comes from the project to sequence 1,000 human genomes, which suggests some of these sequences are doing human-specific things.

Although the 80 percent figure doesn't really fit my definition of functional, the authors do present a compelling case that more of the genome is doing something useful than we previously had evidence for.

One of the other things the authors look at is all the human genetic differences that have been associated with diseases. Most of these haven't been located in genes, but the authors find they're enriched in the sequences that have met some definition of "functional." And, if you expand to look for things that are only near something that has been identified by ENCODE (rather than right at a location), the number goes up further still.

There are probably many cases where this involves a real and significant association between a change and human disease. But it's also probable that many of these are false positives, given the very high frequency of sites identified as giving a positive signal in at least some ENCODE assays.
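The "near something" effect can be illustrated with a simple interval-proximity check. This is a minimal sketch with invented coordinates, assuming sorted, non-overlapping elements on a single chromosome; `near_element` and the data are hypothetical and are not how the ENCODE authors ran their analysis. Widening the window lets more of the same variants count as hits without any new biology being involved.

```python
from bisect import bisect_left
from typing import List, Tuple

# Half-open [start, end) coordinates on one chromosome (hypothetical).
Interval = Tuple[int, int]


def near_element(pos: int, elements: List[Interval], window: int) -> bool:
    """True if pos lies inside, or within `window` bases of, any element.

    Assumes `elements` is sorted by start and non-overlapping, so only the
    elements immediately left and right of pos need to be checked.
    """
    starts = [start for start, _ in elements]
    i = bisect_left(starts, pos)
    for j in (i - 1, i):
        if 0 <= j < len(elements):
            start, end = elements[j]
            if start - window <= pos < end + window:
                return True
    return False


# Invented annotated elements and disease-associated variant positions.
elements: List[Interval] = [(100, 200), (1_000, 1_050), (5_000, 5_400)]
variants = [150, 300, 980, 2_500, 5_450, 9_000]

for window in (0, 100, 1_000, 5_000):
    hits = sum(near_element(v, elements, window) for v in variants)
    print(f"window={window:>5} bp: {hits} of {len(variants)} variants count as hits")
```

With these made-up numbers, the count climbs from 1 of 6 variants (no window) to 6 of 6 (a 5,000 bp window).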

In the long term, that could be a significant problem. ENCODE is mostly providing a resource for other researchers to understand the things they're studying, be it DNA binding proteins or human diseases. With 1,640 assays in 147 cell types, it will be very easy for them to search the ENCODE data and find a single assay that tells them what they'd like to see.

This danger is highlighted by the fact that the percentage of the genome flagged as functional is already at 80 percent, and the authors suggest that the number will go higher when they look at additional cell types and DNA binding proteins. If it reaches 100 percent (something that an ENCODE researcher has already suggested), then it will be very difficult to not come home with a positive result whenever you search the ENCODE genome data.
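A back-of-the-envelope way to see why an "at least one assay" rule creeps toward 100 percent: if each assay independently flagged a given base by chance with probability p, the chance that at least one of n assays flags it is 1 - (1 - p)^n. The sketch below assumes independence and a made-up p of 0.01; real assays are correlated, so this only illustrates the direction of the effect, not ENCODE's actual false-positive rate. `chance_flagged` is a hypothetical helper.

```python
def chance_flagged(p: float, n_assays: int) -> float:
    """Probability that a base is flagged by at least one of n independent assays.

    p is the (hypothetical) per-assay chance of a spurious signal at that base.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n_assays < 0:
        raise ValueError("n_assays must be non-negative")
    return 1.0 - (1.0 - p) ** n_assays


# 1,640 echoes the number of ENCODE data sets; p = 0.01 is purely illustrative.
for n in (1, 10, 100, 1_640):
    print(n, round(chance_flagged(0.01, n), 3))
```

With p = 0.01, a single assay flags about 1 percent of bases by chance, 100 assays flag about 63 percent, and by 1,640 the value rounds to 1.0.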

All of this means that ENCODE will be a tool that has to be used very carefully, and things that come out of it should be checked in detail. Still, it's a potentially powerful tool, since it provides so much information about so many different genes and cell types. Biologists will undoubtedly end up enjoying having it as much as they've enjoyed having the genome sequence in the first place.

“It is possible to commit no mistakes and still lose. That is not a weakness. That is life.”

-Jean-Luc Picard

"Men are afraid that women will laugh at them. Women are afraid that men will kill them."

-Margaret Atwood


- Ziggy Stardust

- Sith Devotee

- Posts: 3114

- Joined: 2006-09-10 10:16pm

- Location: Research Triangle, NC

Re: Much Less Junk DNA Than Previously Thought

The difficulty with accurately testing these portions of the DNA is that most of their functions may be epigenetic. That is, they are regulatory in some fashion, and are turned "on" and "off" by a complicated network of stimuli, by whether or not some other section is activated, etc. It can get very complicated very fast when you try to unravel even relatively simple protein-coding genes. There will be sections of DNA thousands and thousands of base pairs upstream or downstream that directly affect the expression of that particular gene, not to mention the presence or absence of certain methyl groups or histones, paramutation, genomic imprinting, which chromosome the DNA is on to begin with, how tightly wound the DNA is on the chromosome (which affects which regulatory factors can physically access certain stretches of the code), transvection, the phenotype/genotype of the parents, and don't even get me started on how friggin' complicated prion interactions can be.

- His Divine Shadow

- Commence Primary Ignition

- Posts: 12791

- Joined: 2002-07-03 07:22am

- Location: Finland, west coast

Re: Much Less Junk DNA Than Previously Thought

As a side note, I believe this was predicted by Dawkins in The Selfish Gene back when it was written.

Those who beat their swords into plowshares will plow for those who did not.

- kc8tbe

- Padawan Learner

- Posts: 150

- Joined: 2005-02-05 12:58pm

- Location: Cincinnati, OH

Re: Much Less Junk DNA Than Previously Thought

I don't think this is terribly surprising to many in the field of genetics, but it's nice to see it finally published. Unfortunately, it makes the $1,000 genome a bit less attainable for now.

- The Grim Squeaker

- Emperor's Hand

- Posts: 10319

- Joined: 2005-06-01 01:44am

- Location: A different time-space Continuum


Re: Much Less Junk DNA Than Previously Thought

kc8tbe wrote:

I don't think this is terribly surprising to many in the field of genetics, but it's nice to see it finally published. Unfortunately, it makes the $1,000 genome a bit less attainable for now.

It depends on what you're looking for, and how well you know WHAT you want to look for. For various aberrant mutations that can be detected at roughly the level of exons, this doesn't matter at all. (That would probably remain the [cursory] level of analysis for public health for a very long time anyway.)

Photography

Genius is always allowed some leeway, once the hammer has been pried from its hands and the blood has been cleaned up.

To improve is to change; to be perfect is to change often.


- Alyrium Denryle

- Minister of Sin

- Posts: 22224

- Joined: 2002-07-11 08:34pm

- Location: The Deep Desert


Re: Much Less Junk DNA Than Previously Thought

....

No. Seriously

....

Since when has this been an issue? What the fuck have developmental and functional geneticists been doing these past 12 years, sitting around with their thumbs up the cloacas of their Xenopus laevis?

Oh wait. No. They actually had the whole "junk DNA is not junk. It is instead regulatory and structural elements" down for some time now. Have the whole-scale genomics people just ignored them? Oh wait. Yes.

Sorry


GALE Force Biological Agent/

BOTM/Great Dolphin Conspiracy/

Entomology and Evolutionary Biology Subdirector:SD.net Dept. of Biological Sciences

There is Grandeur in the View of Life; it fills me with a Deep Wonder, and Intense Cynicism.

Factio republicanum delenda est


- Memnon

- Padawan Learner

- Posts: 211

- Joined: 2009-06-08 08:23pm

Re: Much Less Junk DNA Than Previously Thought

Alyrium Denryle wrote:

....

Oh wait. No. They actually had the whole "junk DNA is not junk. It is instead regulatory and structural elements" down for some time now. Have the whole-scale genomics people just ignored them? Oh wait. Yes.

Sorry

I'm not sure how serious this post is, but I'll go ahead and post some background anyway in case someone thinks genomics people are slacking... http://www.modencode.org/ is an example of such whole-scale genomics epigenetic mapping. Work on these projects takes a long-ass time because you have to validate everything to make sure that you have reference-quality data. And, as Ziggy said, it gets complicated: you need to use many different tests to get at the data. Even Drosophila melanogaster and C. elegans, with much smaller genomes, still took a couple of years. It's a testament to the power of human research grant foundations like HHMI, imo, that this one was so fast.

Are you accusing me of not having a viable magnetic field? - Masaq' Hub, Look to Windward

- Terralthra

- Requiescat in Pace

- Posts: 4741

- Joined: 2007-10-05 09:55pm

- Location: San Francisco, California, United States

Re: Much Less Junk DNA Than Previously Thought

A much more in-depth analysis of how the ENCODE report was less than usefully accurate:

John Timmer at Ars Technica wrote:

Most of what you read was wrong: how press releases rewrote scientific history

Repeating myths may make good stories, but it breeds confusion. See the ENCODE news.

This week, the ENCODE project released the results of its latest attempt to catalog all the activities associated with the human genome. Although we've had the sequence of bases that comprise the genome for over a decade, there were still many questions about what a lot of those bases do when inside a cell. ENCODE is a large consortium of labs dedicated to helping sort that out by identifying everything they can about the genome: what proteins stick to it and where, which pieces interact, what bases pick up chemical modifications, and so on. What the studies can't generally do, however, is figure out the biological consequences of these activities, which will require additional work.

Yet the third sentence of the lead ENCODE paper contains an eye-catching figure that ended up being reported widely: "These data enabled us to assign biochemical functions for 80 percent of the genome." Unfortunately, the significance of that statement hinged on a much less widely reported item: the definition of "biochemical function" used by the authors.

This was more than a matter of semantics. Many press reports that resulted painted an entirely fictitious history of biology's past, along with a misleading picture of its present. As a result, the public that relied on those press reports now has a completely mistaken view of our current state of knowledge (this happens to be the exact opposite of what journalism is intended to accomplish). But you can't entirely blame the press in this case. They were egged on by the journals and university press offices that promoted the work—and, in some cases, the scientists themselves.

To understand why, we'll need a bit of biology and a bit of history before we can turn back to the latest results and the public response to them.

What we know about DNA, and when we knew it

Among other things, DNA has at least two key functions. First, it codes for the proteins that perform most of a cell's functions. Second, it has control sequences that don't encode anything, but determine when and where the coding sequences are active. We've had some indication that non-coding DNA played key regulatory roles since the 1960s, when the Lac operon was described and won its discoverers the Nobel Prize.

The Lac operon is present in bacterial genomes, which are under extreme pressure to carry as little DNA as possible. The typical bacterial genome is over 85 percent protein-coding DNA, leaving just a small fraction for regulatory purposes. But that isn't generally true of vertebrates.

The coding portions of vertebrate genes turned out to be interrupted by noncoding regions, called introns. Some of these are huge—roughly a third the size of some of the smaller bacterial genomes. Vertebrate genomes also appeared to be littered with old and disabled viruses and mobile genetic parasites called transposons. Even some of the coding portions seemed a bit useless—near exact duplicates of genes were common, as were mutated and disabled copies. Many of these apparently useless pieces of DNA continued to carry sites for regulatory DNA binding proteins and continued to make RNA.

(To give you an idea of how mainstream all this was, I spent some time working on a mouse gene that was thought to be superfluous because it was a near-exact copy of a gene used by the immune system. But the copy was only expressed in males because a mobile genetic element's regulatory sequences had been inserted nearby. And I knew all this as an undergrad in the late 1980s).

By the time we sequenced the human genome, we discovered that this seemingly useless stuff was the majority. Over half the genome was built from the remains of viruses and transposons. Introns accounted for another large fraction. And all of it seemed to be an evolutionary accident. One fish, the fugu, lacks a lot of this DNA, and seems to get along fine, while many salamanders have ten times the DNA per cell that humans do. And if you looked at the DNA of different mammals, the vast majority of it (about 95 percent) wasn't shared by different species.

These findings seemed to support a model that was first proposed back in the 1970s, which picked up the (possibly unfortunate) moniker junk DNA. Genomic accidents (duplicating genes, picking up a virus) happen at a steady rate. Individually, these don't cause an appreciable cost in terms of fitness, so species aren't under strong selective pressure to get rid of the resulting DNA, and pieces could linger in the genome for millions of years. But the typical bit of junk doesn't do anything positive for the animals that carry it.

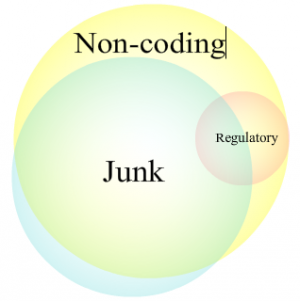

Regulatory, junk, and non-coding DNA are all partly overlapping categories, which helps foster confusion. (Circles not to scale.)

You could even consider the idea of junk DNA to be a scientific hypothesis. It notes that animal genomes experience several processes that produce superfluous bits of DNA, predicts that these will not cause enough harm to be selected for elimination, and proposes an outcome: genomes littered with random bits of history that have no impact on an organism's fitness.

Junk dies a thousand deaths

For decades we've known a few things: some pieces of non-coding DNA were critically important, since they controlled when and where the coding pieces were used; but there was a lot of other non-coding DNA and a good hypothesis, junk DNA, to explain why it was there.

Unfortunately, things like well-established facts make for a lousy story. So instead, the press has often turned to myths, aided and abetted by the university press offices and scientists that should have been helping to make sure they produced an accurate story.

Discovery of new regulatory DNA isn't usually surprising, given that we've known it's out there for decades. There has been a steady stream of press releases that act as if finding a function for non-coding DNA is a complete surprise. And many of these are accompanied by quotes from scientists that support this false narrative.

The same thing goes for junk DNA. We've known for decades that some individual pieces of junk DNA do something useful. Introns can regulate gene expression. Bits of former virus or transposon have been found incorporated into genes or used to regulate their expression. So some junk DNA can be useful, in much the same way that a junk yard can be a valuable source of spare parts.

But it's important to keep these in perspective. Even if a function is assigned to a piece of junk that's 1,000 base pairs long, that only accounts for about 1/2,250,000 of the total junk that is estimated to reside in the human genome. Put another way, it's important not to fall into the logical fallacy that finding a use for one piece of junk must mean that all of it is useful.
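The ratio implies an estimate for the total amount of junk, J. Working backward (the roughly 3.2 billion base pair size of the human genome is a standard approximate figure, not one stated in the article):

```latex
\frac{1{,}000\ \text{bp}}{J} \approx \frac{1}{2{,}250{,}000}
\;\Longrightarrow\;
J \approx 1{,}000 \times 2{,}250{,}000\ \text{bp} = 2.25 \times 10^{9}\ \text{bp},
\qquad
\frac{J}{3.2 \times 10^{9}\ \text{bp}} \approx 0.70
```

So the figure implies that roughly 70 percent of the genome is junk, and a single 1,000 bp element with a known function is a vanishingly small slice of it.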

Despite that, many new findings in this area are accompanied by some variation on the declaration that junk is dead. Both press officers and scientists have presented a single useful piece of virus as definitively establishing that every virus, transposon, and dead gene in the human genome is essential for our collective health and survival.

A bad precedent, repeated

This brings us to the ENCODE project, which was set up to provide a comprehensive look at how the human genome behaves inside cells. Back in 2007, the consortium published its first results after having surveyed one percent of the human genome, and the results foreshadowed this past week's events. The first work largely looked at what parts of the genome were made into RNA, a key carrier of genetic information. But the ENCODE press materials performed a sleight-of-hand, indicating that anything made into RNA must have a noticeable impact on the organism: "the genome contains very little unused sequences; genes are just one of many types of DNA sequences that have a functional impact."

There was a small problem with this: we already knew it probably wasn't true. Transposons and dead viruses both produce RNAs that have no known function, and may be harmful in some contexts. So do copies of genes that are mutated into uselessness. If that weren't enough, just a few weeks later, researchers reported that genes that are otherwise shut down often produce short pieces of RNA that are then immediately digested.

So even as the paper was released, we already knew the ENCODE definition of "functional impact" was, at best, broad to the point of being meaningless. At worst, it was actively misleading.

But because these releases are such an important part of framing the discussion that follows in the popular press, the resulting coverage reflected ENCODE's spin on its results. If it was functional, it couldn't be junk. The concept of junk DNA was declared dead far and wide, all based on a set of findings that were perfectly consistent with it.

Five years later, ENCODE apparently decided to kill it again.

A new definition, the same problem

In the lead paper of a series of 30 released this week, the ENCODE team decided to redefine "functional." Instead of RNA, its new definition was more DNA focused, and included sequences that display "a reproducible biochemical signature (for example, protein binding, or a specific chromatin structure)." In other words, if a protein sticks there or the DNA isn't packaged too tightly to be used, then it was functional.

That definition nicely encompasses the valuable regulatory DNA, which controls nearby genes through the proteins that stick to it. But—and this is critical—it also encompasses junk DNA. Viruses and transposons have regulatory DNA to ensure they're active; genes can pick up mutations in their coding sequence that leave their regulatory DNA intact. In short, junk DNA would be expected to include some regulatory DNA, and thus appear functional by ENCODE's definition.

By using that definition, the ENCODE project has essentially termed junk DNA functional by fiat—for the second time. And perhaps more significantly, they did so two paragraphs after declaring that their results showed 80 percent of the genome was functional.

The response has been about as unfortunate as you might predict. The Nature press materials announcing the paper proclaim, "Far from being junk, the vast majority of our DNA participates in at least one biochemical event in at least one cell type." The European Molecular Biology Laboratory's release said that the work "revealed that much of what has been called 'junk DNA' in the human genome is actually a massive control panel with millions of switches regulating the activity of our genes." MIT's was entitled "Researchers identify biochemical functions for most of the human genome."

But this can't simply be blamed on the PR staff. Scientists from the National Human Genome Research Institute have been fostering the confusion. One of its program directors was quoted by UC Santa Cruz as suggesting we thought regulatory DNA was junk: "Far from being 'junk' DNA, this regulatory DNA clearly makes important contributions to human disease." And its director, Eric Green, was quoted by Penn State as saying, "we can now say that there is very little, if any, junk DNA."

This confusion leaked over to the popular press. Bloomberg's coverage, for example, suggests we've never discovered regulatory DNA: "Scientists previously thought that only genes, small pieces of DNA that make up about 1 percent of the genome, have a function." The New York Times defined junk as "parts of the DNA that are not actual genes containing instructions for proteins." Beyond that sort of fundamental confusion, almost every report in the mainstream press mentioned the 80 percent functional figure, but none I saw spent time providing the key context about how functional had been defined.

The part in which we conclude

Inset: SOME BRIGHT SPOTS

Not everything was awful. Most of the press releases, while often repeating the 80 percent figure without context, didn't contain any egregious errors. Some were exemplary. The Wellcome Trust's Sanger Center produced a release that focused on its findings on disabled genes, some of which have evolved new functions. But it is explicit in stating that "some" constitutes only nine percent. The publisher, BMC, accurately calls these dead genes "stretches of fossil DNA, evolutionary relics of the biological past," and cautiously suggests that only "a number of pseudogenes may be active."

Ed Yong's blog post on the topic spent several paragraphs diving into how "functional" was defined, and has tracked some of the negative reactions scientists had to the publicity. These have included Princeton's Leonid Kruglyak, who has voiced his displeasure via Twitter, and T. Ryan Gregory, who, despite his long-standing interest in junk DNA, expressed frustration with ENCODE on his blog. There are also good takes on the problems at the blog of biochemists Mike White and Steve Mount.

Even Ewan Birney, an ENCODE team member who was widely quoted as hyping the significance of the 80 percent figure, published a fairly nuanced discussion of functionality on his personal blog. And Nature's Brendan Maher, whose parent organization produced some of the problematic press materials, has done an excellent job of tracking the ensuing controversy.

ENCODE remains a phenomenally successful effort, one that will continue to pay dividends by accelerating basic science research for decades to come. And the issue of what constitutes junk DNA is likely to remain controversial—I expect we'll continue to find more individual pieces of it that perform useful functions, but the majority will remain evolutionary baggage that doesn't do enough harm for us to eliminate it. Since neither issue is likely to go away, it would probably be worth our time to consider how we might prevent a mess like this from happening again.

The ENCODE team itself bears a particular responsibility here. The scientists themselves should have known what the most critical part of the story was—the definition of "functional" and all the nuance and caveats involved in that—and made sure the press officers understood it. Those press officers knew they would play a key role in shaping the resulting coverage, and should have made sure they got this right. The team has now failed to do this twice.

More generally, the differences among non-coding DNA, regulatory DNA, and junk DNA aren't really that hard to get straight. And there's no excuse for pretending that things we've known for decades are a complete surprise.

Unfortunately, this is a case where scientists themselves get these details wrong very often, and their mistakes have been magnified by the press releases and coverage that have resulted. That makes it much more likely that any future coverage of these topics will repeat the past errors.

If the confused coverage of ENCODE has done anything positive, it has provoked a public response by a number of scientists. Their criticisms may help convince their colleagues to be more circumspect in the future. And maybe a few more reporters will be aware that this is an area of genuine controversy, and it will help them identify a few of the scientists they should be talking to when covering it in the future.

It's just a shame the public had to be badly misled in the process.