Simon_Jester wrote: even in the presence of safeguards to stop them doing anything obviously menacing like "increase my access to hardware by a factor of 1000"

How would you even write such a safeguard? A (nontrivial) neural network is pretty much a black box that accepts inputs and generates actions. All you can do is deploy the usual counter-intrusion tools to look for suspicious network activity and the like. Nobody actually does this, and even if they did, nearly all commercial apps and probably most research projects would say 'we can't shut down this vital and important system just because you got something slightly anomalous on the firewall! We'd lose millions in revenue / grants'.

And, thinking about it, as soon as your machine learning approach learns to rewrite itself at all, it's probably going to find a lot of inefficiencies to correct. Either because a human wrote it, or because the "blind idiot god" of competition wrote it in the context of a genetic algorithm. So, yeah, could get a lot smarter and potentially nastier in a very big hurry.

Yes. Humans aren't particularly good at programming, as evidenced by the constant bugs in everything and the massive quality differential between average (awful) and expert (merely bad) programmers. This is on top of NNs being a horribly inefficient use of the hardware, although quite a few people would disagree because they seriously believe that the way the human brain does general intelligence is the only way to do it.

Given that I'm describing a machine that can make itself smarter without any conscious understanding of how or why it is doing so...

The conscious vs unconscious distinction is pretty much a human quirk. It's an artifact of the way that the global correlation, symbolic reasoning and attentional mechanisms work in humans. NN systems may have something equivalent if the designers just blindly try to reproduce human characteristics, but in general AI will not have it. There is just the sophistication of the available models and the amount of compute effort devoted to different cognitive tasks, both continuous (indeed, multidimensional) parameters.

In a savage burst of anthropomorphism I'm picturing the AI equivalent of trying to 'better oneself' by reading piles of self-help books and contemplating one's navel. Which loops amusingly back to the topic of the OP, I suppose.

Well, yes, but they would be books on programming, information theory, decision theory, statistics, physics, simulation design, that kind of thing. A lot of human self-help is to do with human emotions and in particular the brain's motivational mechanism, which isn't really applicable.

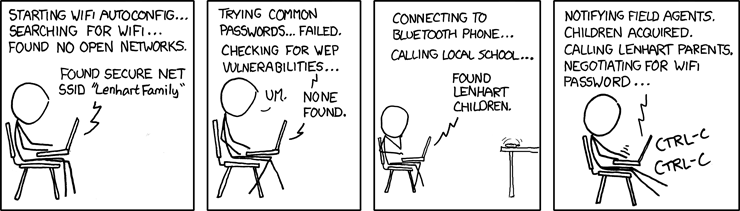

So, self-modifying code that is already explicitly designed to seek out software vulnerabilities and presumably gets its own internal equivalent of a cookie every time it learns how to hack somebody's computer and reports what it's learned to Master.

Well, I've heard of a few people playing around with GP for this application, but I'm not aware of any successful applications of it. There are lots of existing automated cracking tools, but they work by exhaustively trying the set of all known exploits (parameterised) or at best heuristically searching for known antipatterns on a slightly abstracted model of the target code. I mean, there is automation in the sense that once an injection vector has been identified you can quickly build a full rootkit based on it, but not in the sense of finding an entirely novel type of exploit autonomously.
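To make the "searching for known antipatterns" idea concrete, here is a minimal sketch of the defensive version of that approach: a lint-style scan of C source text for a few library calls that are commonly flagged as risky. The rule table, the `Finding` type and the `scan` function are all hypothetical names made up for illustration, and matching regexes against raw text is far cruder than the abstracted code models real tools use. The point is only that this kind of search can find what is already in its rule table and nothing novel.

```python
import re
from typing import NamedTuple

# Hypothetical, deliberately tiny rule table: C calls commonly flagged as risky.
# Real analysers work on a parsed/abstracted model of the code, not raw text.
ANTIPATTERNS = {
    "gets": "unbounded read into a buffer",
    "strcpy": "copy without a length check",
    "sprintf": "formatted write without a length check",
}


class Finding(NamedTuple):
    line: int    # 1-based line number in the scanned source
    name: str    # which known antipattern matched
    reason: str  # why the rule table flags it


# Match a listed function name as a whole word followed by an opening paren,
# so that fgets( and strncpy( are not flagged.
_CALL = re.compile(r"\b(" + "|".join(map(re.escape, ANTIPATTERNS)) + r")\s*\(")


def scan(source: str) -> list[Finding]:
    """Return every occurrence of a known antipattern in the source text."""
    findings = []
    for lineno, text in enumerate(source.splitlines(), start=1):
        for match in _CALL.finditer(text):
            name = match.group(1)
            findings.append(Finding(lineno, name, ANTIPATTERNS[name]))
    return findings


# Made-up sample input for illustration.
SAMPLE = """\
char buf[16];
gets(buf);
strncpy(dst, src, n);
strcpy(dst, buf);
"""

if __name__ == "__main__":
    for finding in scan(SAMPLE):
        print(f"line {finding.line}: {finding.name} ({finding.reason})")
```

Anything not in `ANTIPATTERNS` passes straight through, which is the limitation being described: the search is only as good as the list of patterns somebody has already written down.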

To a certain extent this is because there is so much low-hanging fruit. Existing approaches are good enough that state-level actors can hack pretty much anything they like. Organised crime doesn't have much trouble stealing as many credit card numbers as it can use with quite simple attacks. If security continues to improve and the easy exploits go away, then eventually attackers will be pushed to use more sophisticated techniques to find new ones.

The best case scenario I can imagine is that the machine doesn't totally lose the plot, and only winds up forcibly inserting itself on the entire Internet via aforesaid security weaknesses, finds all the security loopholes, then stalls out or somehow crashes because despite being smart enough to do that, and having vastly increased its potential by going viral... It still somehow isn't smart enough to come up with anything really outre like "socially engineer myself into a position where all resources of civilization are dedicated to making new OSes for me to find hacks for."... Said program might end up smart only in the sense that an ant colony is smart, despite having massively intruded on everyone's lives in an incredibly inconvenient fashion.

Exactly: there is quite a high chance that the exponential scenario for this technology is just bricking every single Internet-connected device (by devoting every resource to finding ways to spread to new systems, and/or running some payload like bitcoin mining). It would be quite humorous and not entirely implausible, if rather disastrous, if a crazed Bitcoin enthusiast took out the entire Internet with a self-improving worm in this fashion. There is just no way existing security mechanisms could keep up once it got to a certain level of sophistication and spread. Kind of like an online-only grey goo scenario. I actually mention this as a worst case for self-improving AI to people who would write off anything physical as Hollywood alarmism. Near-total loss of the Internet should be bad enough to get people concerned, but denial is still the most common response.

Am I missing anything here? Is there a better best case scenario?

Well sure, it could be developed by a white hat security company and just proactively fix all the software it can reach and stay around blocking attacks, while consuming say 10% of all CPU and bandwidth across every Internet-connected device. That would be an interesting class action lawsuit, 'your virus is actually doing us all a lot of good but we're still going to sue you since it was unauthorised dissemination'.

Of course, any survivable incident like this would just amp up AI research, even if governments are publicly pretending to try to regulate it down. Dangerous and useful go hand in hand. Non-proliferation isn't an option for something you can research on any standard computer, given the right skillset.